Powering Sovereign AI Factories and Neoclouds

From Metal to a Production-Ready AI Cloud Service

OpenNebula is a vendor-neutral open platform for building and operating secure, multi-tenant AI factories that deliver AI-as-a-Service environments for HPC centers, Neoclouds and Telcos.

Why OpenNebula?

Cloud-Like Access Model

Interactive, on-demand access to GPU resources and AI models to run inference, fine-tuning, and training as a service.

HPC/AI and K8s Workloads

Run cloud-native workloads on Kubernetes, or execute large HPC/AI models directly on VMs with passthrough virtualization.

Flexible and Vendor-Neutral

Open, modular, and adaptable to any management software and underlying hardware, giving you full control, customization, and freedom.

Ecosystem Integration

Deploy and manage validated GenAI and LLM frameworks such as vLLM and Hugging Face, as well as NVIDIA NIM and other NVIDIA AI tools.

Elevating AI Factories from Metal to Intelligence

Top AI Factory Features Built Into OpenNebula

GPU Partitioning and Sharing

Accelerated Networking (East–West)

DPU-Accelerated Data Paths

Offload networking, storage, and security operations to achieve lower latency and higher throughput.

Secure Multi-Tenancy and Governance

Intelligent Scheduling and Optimization

Confidential Computing and Trust

Protect sensitive AI pipelines with Secure Boot, UEFI, vTPM, and confidential VMs.

Multi-GPU Scaling Capabilities

Heterogeneous Architecture Support

Advanced Performance Management

Want to Evaluate OpenNebula for AI?

Check out our AI Factory Deployment Blueprint, a set of guides to help you deploy a secure, multi-tenant AI Factory with AI-as-a-Service on OpenNebula.

Learn More About AI Factories with OpenNebula

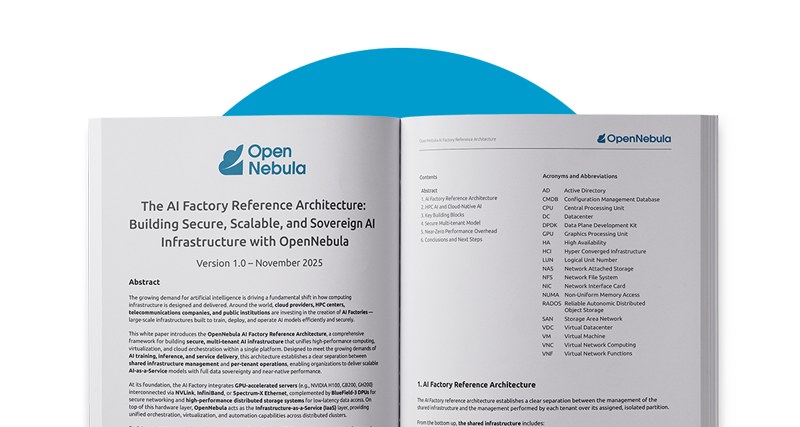

WHITE PAPER

OpenNebula AI Factory Reference Architecture

SCREENCAST

AI Inference on Ampere ARM64 Edge Clusters

Trusted by Industry Leaders

A proven platform, validated by customers, partners, and leading technology ecosystems.

Start Building Your AI Factory Today

Contact us to learn how OpenNebula can help you build AI-ready infrastructure that runs AI workloads securely, efficiently, and at scale, across hybrid, edge, and multi-cloud deployments.