Earlier this week, the 2nd Workshop on Adapting Applications and Computing Services to Multi-core and Virtualization Technologies was held at CERN, where we presented the lxcloud project and its application for a virtual batch farm. This post provides a fairly technical overview of lxcloud, its use of OpenNebula (ONE), and the cloud we are building at CERN. More details are available in the slides (Part I and Part II) from our presentations at the workshop.

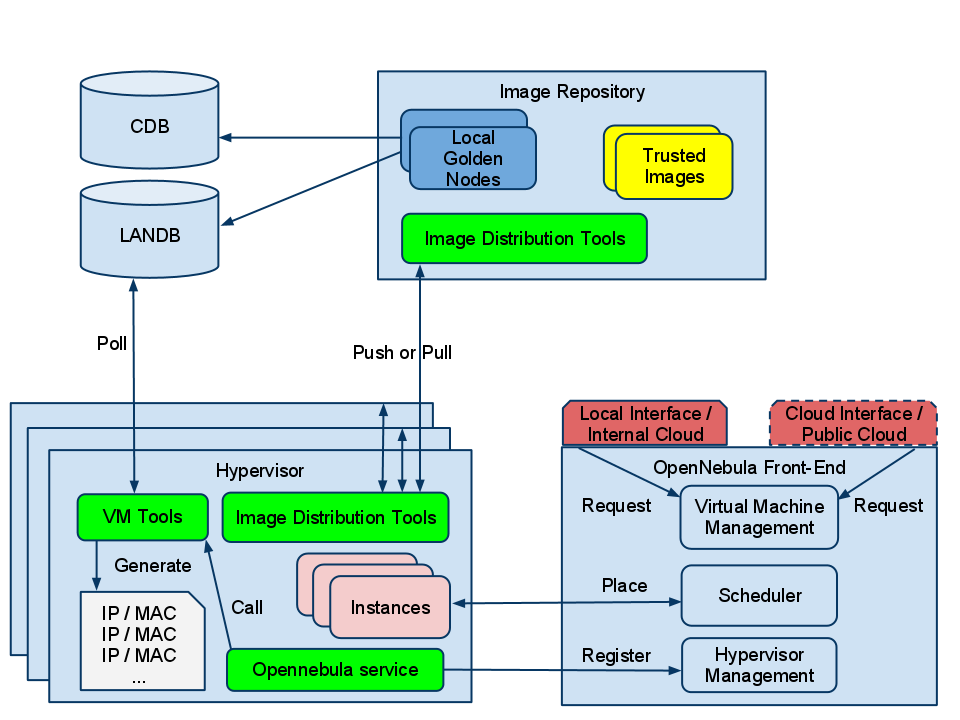

The figure shows the high-level architecture of lxcloud.

Physical resources: The cloud we are currently building at CERN is not a production service and is still being developed and tested for robustness and potential weaknesses in the overall architecture design. Five hundred servers are being used temporarily to perform scaling tests, not only of our virtualization infrastructure but of other services as well. These servers have eight cores, and most of them have 24GB RAM and two 500GB disks. They run Scientific Linux CERN (SLC) 5.5 and use Xen. Once KVM becomes more mainstream and CERN moves to SLC6 and beyond, KVM will be used as the hypervisor, but for now the cloud is 99% Xen. All servers are managed by Quattor.

Networking: The virtual machines provisioned by OpenNebula use a fixed lease file populated with private IP addresses routable within the CERN network. Each IP and its corresponding MAC address are stored in the CERN network database (LANDB), and each VM is given a DNS name. To enable auditing, each IP/MAC pair is pinned to a specific host, which means that the lease a VM obtains from OpenNebula determines which host it is going to run on. This is very static but required for our regular operations. VMs defined in LANDB can be migrated to another host using an API, but this has not been worked on so far. The hosts run an init script which polls LANDB for the list of IP/MAC pairs the host is allowed to run. This script runs very early in the boot sequence and is also used to call the OpenNebula XML-RPC server and register the host, so host registration is automated when the machines boot. A special ONE probe has been developed to check the list of MACs allowed on each host. Once a host registers, the list of MACs is readily available from the ONE frontend. The scheduler can then place a VM on the host that is allowed to run it.
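As a rough illustration of the placement rule described above (not the actual ONE scheduler or probe code), the lookup can be sketched in Python. The function name, host names and MAC addresses are hypothetical; the dictionary stands in for whatever the probe reports to the ONE frontend.

```python
from typing import Dict, Optional, Set


def pick_host(vm_mac: str, allowed_macs: Dict[str, Set[str]]) -> Optional[str]:
    """Return the host that is allowed to run the VM with this MAC, or None.

    allowed_macs maps a host name to the set of MACs reported for it.
    The comparison is case-insensitive because MACs are often written
    in mixed case.
    """
    wanted = vm_mac.lower()
    for host, macs in allowed_macs.items():
        if wanted in {m.lower() for m in macs}:
            return host
    return None


# Hypothetical probe data for two registered hosts.
allowed = {
    "host-a": {"02:00:00:00:00:01", "02:00:00:00:00:02"},
    "host-b": {"02:00:00:00:00:03"},
}

print(pick_host("02:00:00:00:00:03", allowed))
```

Because every IP/MAC pair is pinned to exactly one host, the lookup returns at most one candidate, which is what makes the placement static.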

The image repository/distribution: This component comprises a single server that runs virtual machines managed by the Quattor system. These virtual machines are our “golden nodes”; snapshots of these nodes are taken regularly and pushed/pulled on all the hypervisors. CERN does not use a shared file system other than AFS, so pre-staging the disk images was needed. Pre-staging the source image of the VM instances saves a lot of time at image instantiation. The pre-staging can be done via sequential scp, via scp-wave, which offers a logarithmic speed-up (very handy when you need to transfer an image to ~500 hosts), or via BitTorrent. The BitTorrent setup is currently being tuned to maximize bandwidth and minimize the time for 100% of the hosts to get the image.
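To see why a wave-style copy scales logarithmically, here is a small Python sketch. It assumes an idealized scheme in which every host that already holds the image copies it to one new host per round; the real scp-wave tool may behave differently, and the function name is ours.

```python
def wave_rounds(n_targets: int) -> int:
    """Rounds needed to reach n_targets hosts when the number of image
    holders doubles every round (idealized wave distribution)."""
    holders = 1  # the image server itself
    rounds = 0
    # holders - 1 is the number of target hosts served so far.
    while holders - 1 < n_targets:
        holders *= 2
        rounds += 1
    return rounds


n = 500
print(f"sequential scp: {n} transfers, one after another")
print(f"idealized wave: {wave_rounds(n)} rounds")
```

Under this assumption ~500 hosts are covered in 9 rounds instead of 500 sequential transfers, which is the kind of gain "logarithmic speed-up" refers to.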

The disk images themselves are gzip files of LVM volumes created with dd (from the disk images of the golden nodes). When the file arrives on a hypervisor, the inverse operation happens: it is gunzipped and dd'd onto a local LVM volume. Using LVM source images on all the hosts allows us to use the ONE LVM transfer scripts, which create snapshots of the image at instantiation. That way instantiation takes only a couple of seconds. Currently we do not expect to push/pull images very often, but our measurements show that it takes ~20 minutes to transfer an image to ~450 hosts with BitTorrent and ~45 minutes with scp-wave.
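The pack, unpack and snapshot steps can be illustrated with a few shell pipelines. The Python sketch below only builds the command strings and does not run them; the volume paths, file names, snapshot size and `bs=1M` block size are assumptions, and the commands actually used by the ONE LVM transfer scripts may differ.

```python
import shlex


def pack_cmd(lv_path: str, out_file: str) -> str:
    """Golden node side: dump an LVM volume with dd and gzip it."""
    return f"dd if={shlex.quote(lv_path)} bs=1M | gzip -c > {shlex.quote(out_file)}"


def unpack_cmd(in_file: str, lv_path: str) -> str:
    """Hypervisor side: gunzip the file and dd it onto a local LVM volume."""
    return f"gunzip -c {shlex.quote(in_file)} | dd of={shlex.quote(lv_path)} bs=1M"


def snapshot_cmd(origin_lv: str, snap_name: str, size: str) -> str:
    """Instantiation: create a copy-on-write LVM snapshot of the source image."""
    return (
        f"lvcreate --snapshot --name {shlex.quote(snap_name)} "
        f"--size {shlex.quote(size)} {shlex.quote(origin_lv)}"
    )


# Hypothetical names, for illustration only.
print(pack_cmd("/dev/vg0/golden", "golden.img.gz"))
print(unpack_cmd("golden.img.gz", "/dev/vg0/source"))
print(snapshot_cmd("/dev/vg0/source", "vm-42", "2G"))
```

A snapshot only records blocks that change after its creation, which is consistent with instantiation taking seconds rather than the time of a full image copy.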

OpenNebula: We use the latest development version of ONE (1.6), with some very recent changes that allow us to scale to ~8,000 VM instances on the current prototype infrastructure. As mentioned earlier, the hosts are Xen hosts that auto-register via the XML-RPC server, and a special information probe reads the allowed MACs on each host so that the scheduler can pin VMs to a particular host. We use the new OpenNebula MySQL backend, which is faster than SQLite when dealing with thousands of VMs. We also use a new scheduler that uses XML-RPC and has solved a lot of the database locking issues we were having. As reported in the workshop, we have tested the OpenNebula econe-server successfully and plan to take advantage of it or to use the vCloud or OCCI interface. The choice of cloud interface for the users is still to be decided. Our tests have shown that OpenNebula can manage several thousand VMs fairly routinely, and we have pushed it to ~8,000 VMs, with the scheduler dispatching the VMs at ~1 VM/sec. This rate is tunable and we are currently trying to increase it. We have not tested the Haizea leasing system yet.
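For a sense of scale, the dispatch rate translates into wall-clock time as follows. This is a back-of-the-envelope Python sketch that assumes the rate stays constant for the whole run; the function name is ours.

```python
def dispatch_seconds(n_vms: int, rate_per_sec: float) -> float:
    """Time for the scheduler to dispatch n_vms at a constant rate."""
    if rate_per_sec <= 0:
        raise ValueError("rate_per_sec must be positive")
    return n_vms / rate_per_sec


secs = dispatch_seconds(8000, 1.0)
print(f"{secs:.0f} s, i.e. about {secs / 3600:.1f} hours")
```

At ~1 VM/sec, filling the prototype with ~8,000 VMs takes a bit over two hours, which is why raising the rate matters.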

Provisioning: In the case of virtual worker nodes, we drive the provisioning of the VMs making full use of the XML-RPC API. The VMs that we start for the virtual batch farm are replicas of our lxbatch worker nodes (the batch cluster at CERN); however, they are not managed by Quattor. To make sure that they do not get out of date, we define a VM lifetime (passed to the VM via contextualization). When a VM has been drained of its jobs, the VM literally “kills itself” by contacting ONE via XML-RPC and requesting to be shut down. In this way the provisioning only has to take care of filling the pool of VMs and enforcing the pool policies. Over time the pool adapts and converges towards the correct mix of virtual machines. The VM callback is implemented as a straightforward Python script triggered by a cron job.
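The decision logic of such a callback might look like the sketch below. This is not our production script: the function names and state labels are hypothetical, and `request_shutdown` is a placeholder for the XML-RPC call to ONE, whose exact method name depends on the OpenNebula version.

```python
import time
from typing import Callable, Optional


def lifetime_expired(started_at: float, lifetime_s: float, now: float) -> bool:
    """True once the VM has lived longer than its configured lifetime."""
    return now - started_at >= lifetime_s


def cron_tick(
    started_at: float,
    lifetime_s: float,
    running_jobs: int,
    request_shutdown: Callable[[], None],
    now: Optional[float] = None,
) -> str:
    """One cron invocation: decide whether the VM stays, drains, or retires.

    request_shutdown is a placeholder for the XML-RPC request to ONE.
    """
    now = time.time() if now is None else now
    if not lifetime_expired(started_at, lifetime_s, now):
        return "active"
    if running_jobs > 0:
        # Lifetime is over: accept no new jobs, let the current ones finish.
        return "draining"
    request_shutdown()
    return "shutdown-requested"


# Example with fake timestamps and a stand-in for the XML-RPC call.
print(cron_tick(0.0, 3600.0, 0, lambda: print("asking ONE to shut me down"), now=7200.0))
```

Keeping the shutdown request behind a callable keeps the policy testable without a running ONE frontend.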

We hope you found these details interesting,

Sebastien Goasguen (Clemson University and CERN-IT)

Ulrich Schwickerath (CERN-IT)

Have you, or has anyone else, tried to use the HybridFox or ElasticFox web GUI to work with OpenNebula? Is the EC2 interface rich enough to make that work?

Steve Timm

According to Sebastien and Ulrich, “The cloud we are currently building at CERN is not a production service and is still being developed and tested for robustness and potential weaknesses”. This is a bit of an oxymoron: if something is robust, it does not have intolerable weaknesses.

Also, I see that the Haizea “leasing system” has not been tested yet. First, a lease request is something like “I need 5 CPUs, 2 cores each, and 4GB memory from 10 a.m. until 10 p.m.”

Who is going to make this request? The user? The Sysadmin? Some form of automation? How do we know the resources we order are really what we need, without a Service Level Agreement?

When used with OpenNebula, Haizea acts as a drop-in replacement for OpenNebula’s default scheduler. As such, all requests still have to go through OpenNebula (and Haizea will take care of scheduling them). It is up to the OpenNebula administrator to decide if users can directly request leases, and establishing and enforcing SLAs is left to higher-level components.

There has been lots of press about Platform Computing being used at CERN as well as OpenNebula. What is the relation between the two? Are there plans to run two parallel clouds?

> According to Sebastien and Ulrich, “The cloud we are currently building at CERN is not a production service and is still being developed and tested for robustness and potential weaknesses”. This is a bit of an oxymoron: if something is robust, it does not have intolerable weaknesses.

If you read the sentence correctly, you would understand that they were *testing* the robustness of the service. They never stated in this post that the service *was* robust.

The whole point of this is to discover what is causing any potential issues or weaknesses and to then fix the problems so the system will *then* be a robust system.

You are right on the oxymoron.

The bottom line is that these tests were done back in OpenNebula 1.8/1.9 and led to changes in 2.0. We worked closely with the developers, and they made changes to improve the scalability of the db as well as the clients.

We managed a successful deployment of ~16,000 virtual machines. Currently the system is in production with ~200 VMs running non-stop (Ulrich would know the exact number).

The system is now extremely robust and I do not see any weaknesses. As a matter of fact, a lot of new features are now present in 3.0 (VDC, X.509, accounting, etc.).