LXD system containers differ from application containers (e.g. those managed by Docker, rkt, or even LXC) in their target workload. LXD containers are meant to be a highly efficient alternative to a full virtual machine: they contain a full OS with its own init process and are designed to provide the same experience as a VM. Docker containers, on the other hand, are meant to run a single application process inside a confined and well-defined runtime environment. Both rely on similar technology under the hood, though: LXD uses LXC, and so did Docker until 2014, when it moved from LXC to its own implementation. Essentially, the difference lies in how the container manager runs the containers and in how they interact with the host they run on.

Being able to provision both types of workloads brings real benefits to a cloud platform, which is why OpenNebula added support for LXD in version 5.8 “Edge”. OpenNebula can create LXD containers running on bare metal, but Docker support is provided through Docker Machine (available in the project’s marketplace as a KVM virtual appliance). This gives up the near bare-metal performance containers are known for, because workloads have to go through full virtualization in the KVM appliance. Since LXD is capable of running Docker, you can avoid that loss.

If you want to learn more details about the interaction of Docker over LXD, you can read this post by Stéphane Graber, LXD technical lead at Canonical Ltd, and also have a look at LXD’s FAQ section. We at OpenNebula will leverage both container management systems in order to orchestrate apps: Docker (as a means of application distribution) and LXD (as a convenient execution environment, fast, isolated and directly integrated into the OpenNebula software stack). The app deployment will be managed by OpenNebula’s START_SCRIPT feature, available to contextualized images.

This solution provides 3 runtime levels:

- OpenNebula LXD node

- OpenNebula LXD container

- Docker App container

In this post we will deploy nginx with OpenNebula using a container from the LXD marketplace. You will need an LXD node and an internet connection, which is required to reach both Docker Hub and the LXD marketplace.

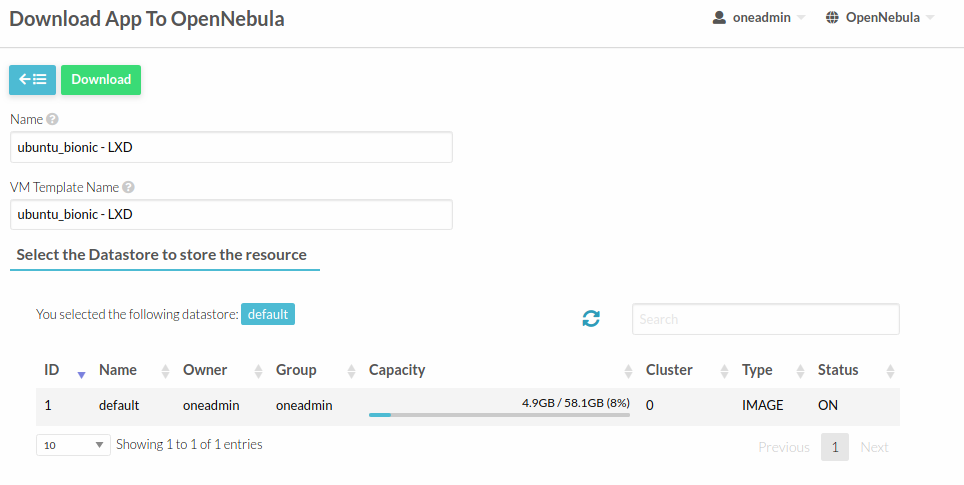

1) Download ubuntu_bionic – LXD from the LXD marketplace

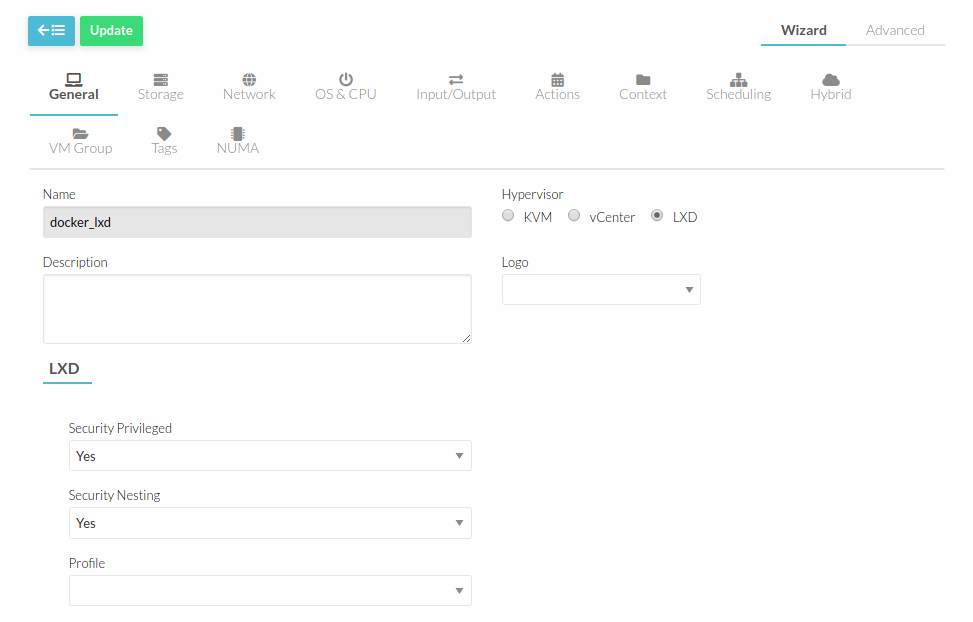

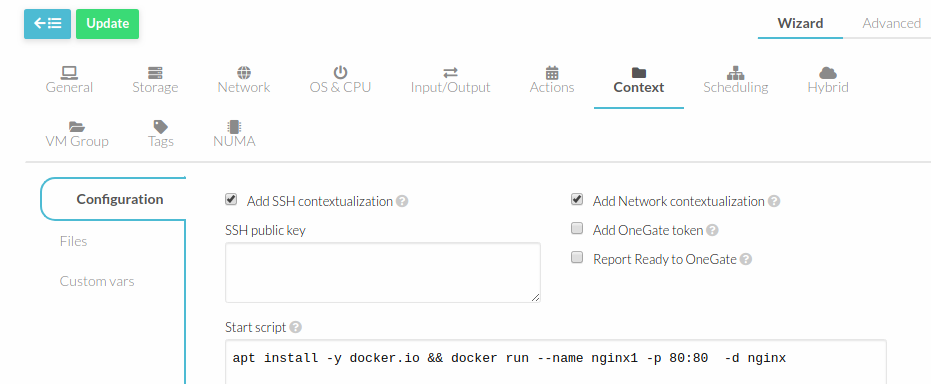

2) Update the VM template

- Add a public network interface

- Set LXD_SECURITY_NESTING to yes

- Add a network with internet access

- Add a START_SCRIPT

Keep in mind that you can create a dedicated template for running Docker containers in order to avoid installing docker.io every time you want to run an app. This will save a lot of time! 😉
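The template changes listed above can be sketched as OpenNebula template attributes. The Python snippet below only renders that text; it is a minimal sketch, not the exact template used in this post. The network name `public` and the script contents are hypothetical: the script installs docker.io and starts the official nginx image with port 80 published. It uses the START_SCRIPT_BASE64 context attribute (the Base64 variant of START_SCRIPT) so the script needs no escaping inside the template.

```python
import base64

# Hypothetical START_SCRIPT: installs Docker inside the LXD container and
# starts the official nginx image, publishing container port 80.
START_SCRIPT = """#!/bin/bash
apt-get update
apt-get install -y docker.io
docker run -d --name nginx -p 80:80 nginx
"""


def render_template_fragment(network_name: str, start_script: str) -> str:
    """Return OpenNebula template attributes for a nested-Docker LXD container.

    START_SCRIPT_BASE64 is used instead of START_SCRIPT so that quotes and
    newlines in the script need no escaping inside the template.
    """
    encoded = base64.b64encode(start_script.encode("utf-8")).decode("ascii")
    lines = [
        f'NIC = [ NETWORK = "{network_name}" ]',  # network with internet access
        'LXD_SECURITY_NESTING = "yes"',  # lets Docker run inside the LXD container
        "CONTEXT = [",
        '  NETWORK = "YES",',
        f'  START_SCRIPT_BASE64 = "{encoded}" ]',
    ]
    return "\n".join(lines)


print(render_template_fragment("public", START_SCRIPT))
```

Paste the printed attributes into your VM template, merging them with the ones created from the marketplace app. With a dedicated image that already ships docker.io, the two apt-get lines could be dropped from the script.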

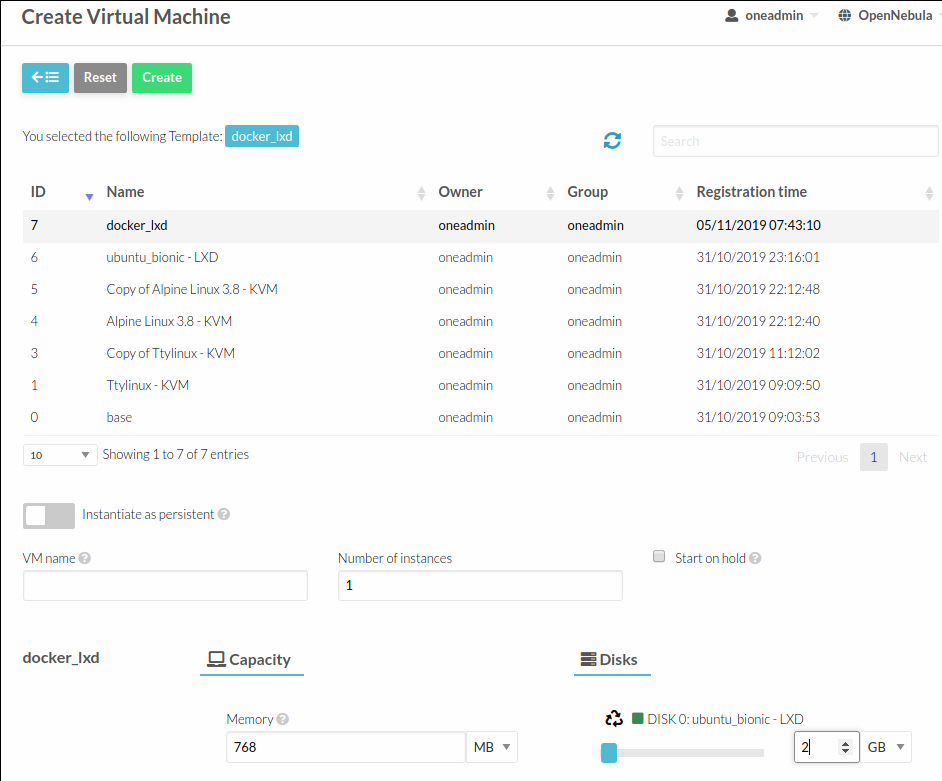

3) Deploy the VM

Extend the image size before deploying (the default 1GB isn’t enough).
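In template terms, resizing means giving the DISK a larger SIZE, which OpenNebula expresses in megabytes. Here is a minimal sketch that renders such an attribute; the image name and the 3 GB figure are hypothetical example values, not a recommendation from this post:

```python
def disk_with_size(image_name: str, size_gb: float) -> str:
    """Render a DISK attribute that grows the image at instantiation time.

    OpenNebula's DISK SIZE is given in megabytes, so convert from GB first.
    """
    size_mb = int(size_gb * 1024)
    return f'DISK = [ IMAGE = "{image_name}", SIZE = "{size_mb}" ]'


# Hypothetical image name and size; use the name your exported image actually has.
print(disk_with_size("ubuntu_bionic - LXD", 3))
```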

4) Voilà!

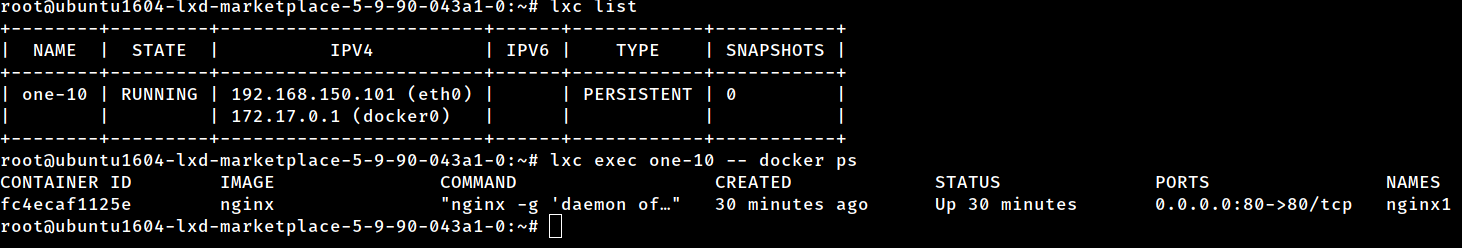

After a while you’ll see a Docker container running nginx inside an LXD container:

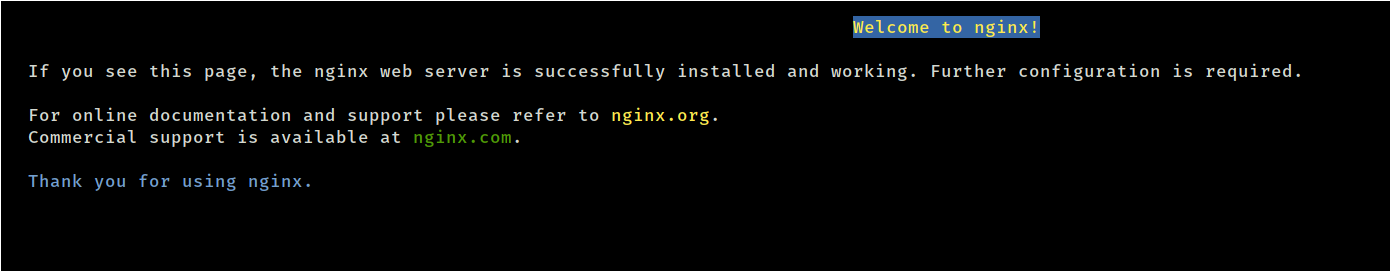
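To confirm the same thing from a shell on the LXD node, you can run `docker ps` inside the LXD container with `lxc exec`. The Python sketch below only builds and runs that command. It assumes the container follows the `one-<VM ID>` naming used by OpenNebula’s LXD driver, and the VM ID is a placeholder you have to supply; the helper names are hypothetical.

```python
from __future__ import annotations

import subprocess


def nested_docker_ps_cmd(vm_id: int) -> list[str]:
    """Build the command that lists Docker containers inside an LXD container.

    Assumption: OpenNebula names the LXD container "one-<VM ID>".
    """
    container = f"one-{vm_id}"
    return ["lxc", "exec", container, "--", "docker", "ps"]


def run_nested_docker_ps(vm_id: int) -> str:
    """Run the command on the LXD node and return its standard output."""
    result = subprocess.run(
        nested_docker_ps_cmd(vm_id), capture_output=True, text=True, check=True
    )
    return result.stdout
```

On the LXD node, `print(run_nested_docker_ps(<VM ID>))` should list the nginx container once the START_SCRIPT has finished; `check=True` makes it raise if `lxc` or `docker` reports an error.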

Now, install lynx or your favorite browser and take a look at nginx (e.g. lynx 192.168.150.101).
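If you would rather script the check than browse to it, here is a minimal Python sketch using only the standard library. It assumes the container runs the stock nginx image, whose default page contains the text “Welcome to nginx!”; the helper names are hypothetical.

```python
import urllib.request


def is_nginx_welcome_page(body: str) -> bool:
    """Heuristic: the stock nginx image serves a page containing this title."""
    return "Welcome to nginx!" in body


def check_nginx(url: str, timeout: float = 5.0) -> bool:
    """Fetch `url` and report whether it looks like the default nginx page.

    Any connection error, timeout or HTTP error status (all OSError subclasses
    in urllib) is reported as False instead of being raised.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and is_nginx_welcome_page(body)
    except OSError:
        return False
```

For example, check_nginx("http://192.168.150.101/") should return True once the app is up, using the example address from this post.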

You can run as many app containers as you want per LXD container, but remember: each LXD container needs its own docker.io setup, which adds extra storage overhead. That overhead becomes more negligible as the number of apps per LXD container increases.
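To put the overhead claim in numbers: if $S$ is the storage taken by one docker.io installation and $n$ apps run in the same LXD container, each app’s share of that fixed cost is

$$
\text{overhead per app} = \frac{S}{n}
$$

so it halves every time the number of apps per container doubles, while a container running a single app carries all of $S$.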

Hope you have enjoyed this post, and don’t forget to leave us your comments below!


Hello, what about using the docker-machine driver and instantiating LXD instead of KVM? I think it should work.

It is a different approach, and a somewhat simpler one. This method allows you to manage Docker containers directly from the OpenNebula frontend.