OpenNebula 5.10 brings a new set of enhancements to the virtualization stack. Virtual Machines can now be deployed with a finely tuned compute resource topology, which brings performance and security benefits. Most of the enhancements apply to KVM, although LXD is getting minor updates as well.

Features

- A VM can now define a fine-grained CPU topology by specifying the number of sockets, cores per socket and threads per core.

- A new NUMA-aware scheduler will pin VMs to specific NUMA nodes, cores or CPU threads of a host, according to different policies.

- Hugepages can be used to improve the performance of VMs.

Setting up the virtualization nodes

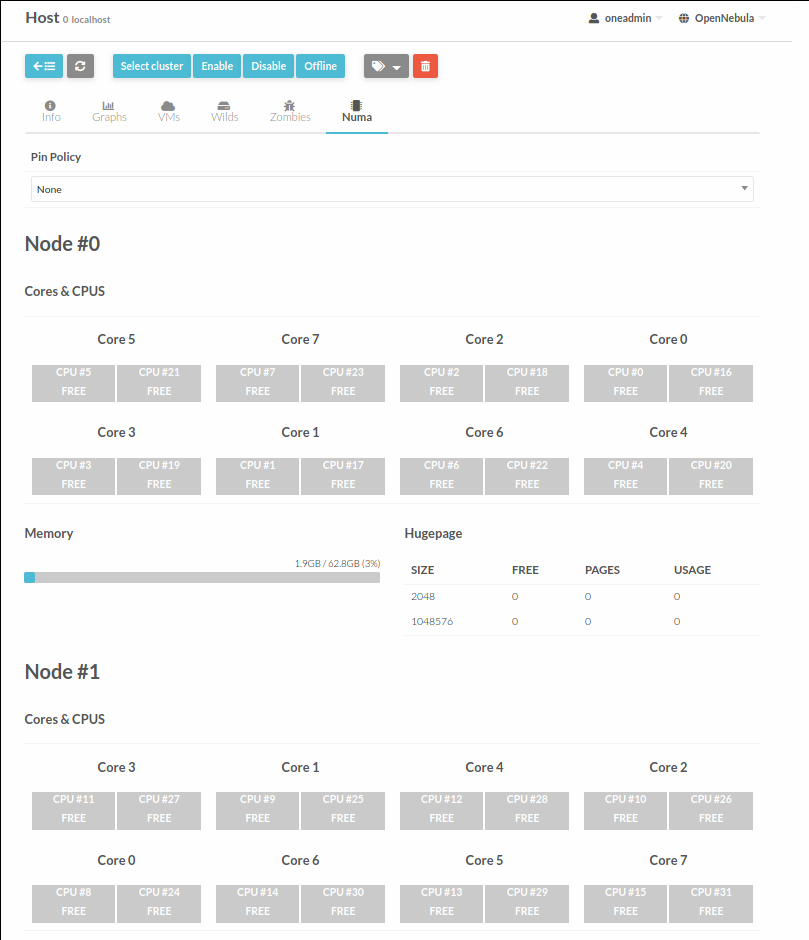

Hosts now have an extra attribute, PIN_POLICY. To use the new features on a host, you’ll need to set its PIN_POLICY to “Pinned”.

The VM scheduler has been updated as well: when a host is marked as Pinned, it will only be used by VM templates that define an advanced compute resources topology. Every core and NUMA node also becomes a resource on which the tuned VMs can be deployed.
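
The placement rule for Pinned hosts can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this post, not the scheduler's actual code: the function name, the dictionary layout, and the reading of "advanced compute resources topology" as "the template defines a TOPOLOGY section" are all assumptions.

```python
def pinned_host_accepts(vm_template: dict) -> bool:
    """Return True if a host marked as Pinned would consider this VM.

    Assumption: a VM counts as having an advanced topology when its
    template carries a non-empty TOPOLOGY section.
    """
    return bool(vm_template.get("TOPOLOGY"))


# Hypothetical templates, reduced to the attributes that matter here.
candidates = {
    "tuned_vm": {"VCPU": "8", "TOPOLOGY": {"PIN_POLICY": "THREAD", "SOCKETS": "2"}},
    "plain_vm": {"VCPU": "1"},
}

# Keep only the VMs that a Pinned host would be offered.
eligible = [name for name, tpl in candidates.items() if pinned_host_accepts(tpl)]
print(eligible)
```

The sketch only covers hosts marked as Pinned; how other hosts treat tuned VMs is not described here, so it is left out.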

Testing the new features…

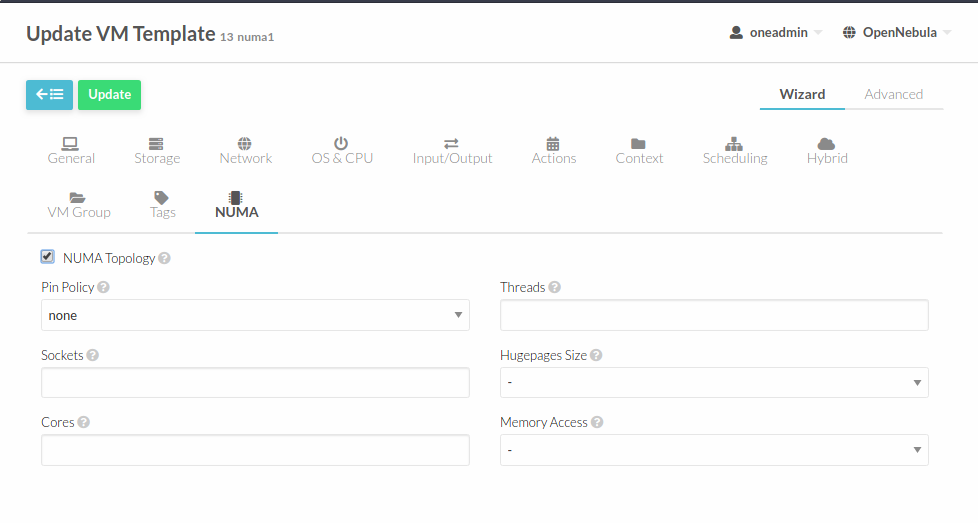

We will create a VM with CPU pinning and hugepages. First, you will need to enable hugepages on the host OS; in this case we use Ubuntu 18.04. The VM will have 2 sockets, 2 cores per socket and 2 threads per core, for a total of 8 vCPUs.

These are the template attributes for the VM:


    CONTEXT=[
      NETWORK="YES",
      SSH_PUBLIC_KEY="$USER[SSH_PUBLIC_KEY]" ]
    CPU="1"
    DISK=[
      IMAGE_ID="0" ]
    GRAPHICS=[
      LISTEN="0.0.0.0",
      TYPE="VNC" ]
    INPUTS_ORDER=""
    LOGO="images/logos/linux.png"
    MEMORY="128"
    MEMORY_UNIT_COST="MB"
    OS=[
      ARCH="x86_64",
      BOOT="" ]
    TOPOLOGY=[
      CORES="2",
      HUGEPAGE_SIZE="2",
      PIN_POLICY="THREAD",
      SOCKETS="2",
      THREADS="2" ]
    VCPU="8"
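
The VCPU value in this template follows from the topology: sockets × cores per socket × threads per core. A minimal Python sketch of that check, assuming the template values have already been read into plain integers (the function and variable names are illustrative, not part of OpenNebula):

```python
def total_vcpus(sockets: int, cores: int, threads: int) -> int:
    """Number of virtual CPUs implied by a sockets/cores/threads topology."""
    return sockets * cores * threads


# Values taken from the TOPOLOGY section and the VCPU attribute above.
topology = {"SOCKETS": 2, "CORES": 2, "THREADS": 2}
vcpu = 8

implied = total_vcpus(topology["SOCKETS"], topology["CORES"], topology["THREADS"])
if implied != vcpu:
    raise ValueError(f"VCPU={vcpu} does not match topology ({implied} threads)")
print(f"topology implies {implied} vCPUs")
```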

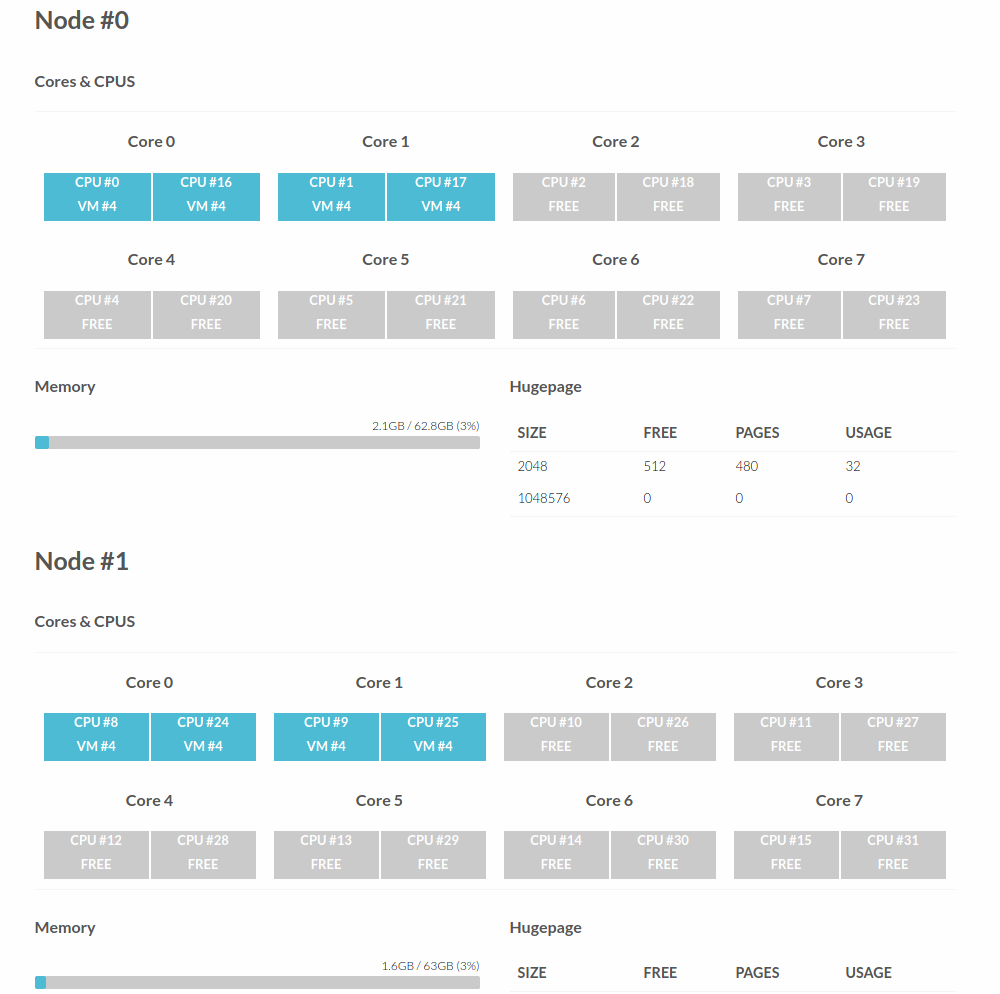

Once the VM is running, we can check the allocation on the host. In this case, virtual node 0 of the VM has been pinned to host NUMA node 0 (CPUs 0, 16, 1 and 17), and virtual node 1 to host NUMA node 1 (CPUs 8, 24, 9 and 25).

Also note that the VM memory is split in half between the two nodes and allocated from the hugepages available on the hypervisor.

    root@numa:~# cat /proc/meminfo | grep Huge
    AnonHugePages:         0 kB
    ShmemHugePages:        0 kB
    HugePages_Total:    1024
    HugePages_Free:      960
    HugePages_Rsvd:        0
    HugePages_Surp:        0
    Hugepagesize:       2048 kB
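
The figures in the output above can be checked against the VM's MEMORY="128" setting. The following Python sketch parses the relevant lines (pasted in as a string, so it does not need to run on the hypervisor) and converts the pages in use to megabytes. The helper name is illustrative, and the sketch assumes no other workload on the host is consuming hugepages.

```python
# Relevant lines copied from the /proc/meminfo output above.
MEMINFO = """\
HugePages_Total: 1024
HugePages_Free: 960
Hugepagesize: 2048 kB
"""


def parse_meminfo(text: str) -> dict:
    """Map each 'Key: value [unit]' line to its integer value."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            values[key.strip()] = int(fields[0])
    return values


info = parse_meminfo(MEMINFO)
pages_in_use = info["HugePages_Total"] - info["HugePages_Free"]
mb_in_use = pages_in_use * info["Hugepagesize"] // 1024  # kB -> MB

print(f"{pages_in_use} pages in use = {mb_in_use} MB")
print(f"{pages_in_use // 2} pages ({mb_in_use // 2} MB) per NUMA node")
```

With these numbers, 64 pages of 2 MB are in use, which adds up to the 128 MB assigned to the VM, or 64 MB per virtual NUMA node.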

If you want to test this code and provide feedback, it is already in the master branch for 5.10. In addition, the documentation with a detailed explanation is live.
