Following our series of posts about OpenNebula and Docker, we would like to showcase the use of Docker Swarm with OpenNebula.

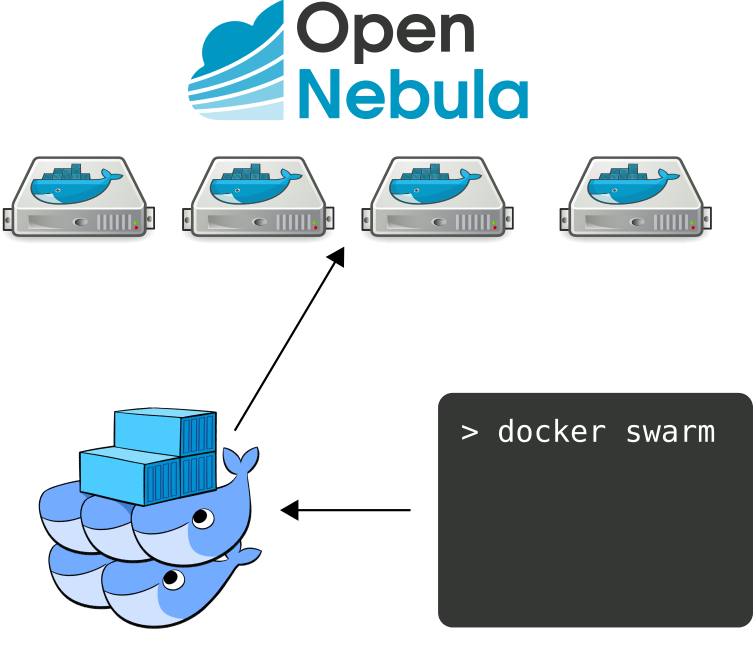

Docker Swarm is native clustering for Docker. With Docker Swarm you can aggregate a group of Docker Engines (running as Virtual Machines in OpenNebula, in our case) into a single virtual Docker host. Docker Swarm delivers several advantages, such as container scheduling and high availability.

We continue with our approach of using Docker Machine with the OpenNebula Driver plugin to deploy Docker Engines easily and seamlessly. Please make sure you have followed the previous post, so that you have a fully functional Docker Machine setup working with OpenNebula.

As displayed in the following image, Docker Swarm will make a cluster out of a collection of Docker Engine VMs deployed in OpenNebula with Docker Machine:

Docker Swarm uses a discovery service to implement cluster communication and discovery. The Docker project provides a hosted discovery service, which is appropriate for testing. In our case, however, we will use Docker Machine to deploy an instance of Consul, specifically the progrium/consul Docker container.

NOTE: This guide is specific to KVM. If you would like to try this plugin with the vCenter hypervisor or with vOneCloud, there are a few small differences, so make sure you read Docker Machine OpenNebula plugin with vCenter. In particular, you will need to use the --opennebula-template-* options instead of --opennebula-image-*.
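If you script these steps for both hypervisors, the image-versus-template option is the part that changes. A minimal sketch, assuming `--opennebula-template-name` is the vCenter counterpart of `--opennebula-image-name` (check the plugin's vCenter notes); `one_source_flag` is a hypothetical helper name.

```sh
#!/bin/sh
# Hypothetical helper: print the OpenNebula driver option that selects the
# boot source for a given hypervisor.
# KVM boots from an image (--opennebula-image-name); vCenter/vOneCloud use a
# template instead (assumed flag: --opennebula-template-name).
one_source_flag() {
    hypervisor=$1
    name=$2
    case "$hypervisor" in
        kvm)
            printf '%s\n' "--opennebula-image-name $name" ;;
        vcenter|vonecloud)
            printf '%s\n' "--opennebula-template-name $name" ;;
        *)
            echo "unknown hypervisor: $hypervisor" >&2
            return 1 ;;
    esac
}
```

For example, `$(one_source_flag kvm boot2docker)` can stand in for `--opennebula-image-name boot2docker` in the commands that follow. The output relies on word splitting, so it assumes image and template names without spaces.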

The first step is to deploy Consul using Docker Machine:

$ docker-machine create -d opennebula --opennebula-network-name private --opennebula-image-name boot2docker --opennebula-b2d-size 10240 consul

$ docker $(docker-machine config consul) run -d -p "8500:8500" -h "consul" progrium/consul -server -bootstrap

$ CONSUL_IP=$(docker-machine ip consul)
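Before creating the Swarm master, you may want to confirm that the Consul server is actually answering. A minimal sketch, assuming `curl` is installed on your workstation and port 8500 is reachable; it polls Consul's `/v1/status/leader` HTTP endpoint, which returns the leader's address as a JSON string, or `""` while no leader has been elected. `consul_has_leader` and `wait_for_consul` are hypothetical helper names.

```sh
#!/bin/sh
# Succeed if a /v1/status/leader response body names a leader.
# Consul answers with a JSON string: "ip:port" when a leader exists,
# or "" while no leader has been elected yet.
consul_has_leader() {
    body=$1
    case "$body" in
        '""'|'') return 1 ;;
        '"'*':'*'"') return 0 ;;
        *) return 1 ;;
    esac
}

# Poll Consul at IP $1 until it reports a leader, giving up after $2
# attempts (default 30, one second apart). Requires curl.
wait_for_consul() {
    ip=$1
    attempts=${2:-30}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        body=$(curl -fsS "http://$ip:8500/v1/status/leader" 2>/dev/null) || body=''
        if consul_has_leader "$body"; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}
```

Usage: `wait_for_consul "$CONSUL_IP" || echo "Consul did not report a leader" >&2`.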

Once it’s deployed, we can deploy the Swarm master:

$ docker-machine create -d opennebula --opennebula-network-name private --opennebula-image-name boot2docker --opennebula-b2d-size 10240 --swarm --swarm-master --swarm-discovery="consul://$CONSUL_IP:8500" --engine-opt cluster-store=consul://$CONSUL_IP:8500 --engine-opt cluster-advertise="eth0:2376" swarm-master

Next, deploy the Swarm nodes:

$ docker-machine create -d opennebula --opennebula-network-name private --opennebula-image-name boot2docker --opennebula-b2d-size 10240 --swarm --swarm-discovery="consul://$CONSUL_IP:8500" --engine-opt cluster-store=consul://$CONSUL_IP:8500 --engine-opt cluster-advertise="eth0:2376" swarm-node-01

You can repeat this for as many nodes as you want.
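Typing the node command by hand for every node is tedious and makes it easy to glue two options together. A minimal sketch, assuming the same network (`private`), image (`boot2docker`) and disk size used above; `swarm_node_cmd` and `create_swarm_nodes` are hypothetical helper names, and `DRY_RUN` is an illustrative variable, not a Docker Machine setting.

```sh
#!/bin/sh
# Hypothetical helper: print the docker-machine command that creates one
# Swarm node named $1, joined to the Consul discovery service at IP $2.
# Options mirror the swarm-node-01 example.
swarm_node_cmd() {
    name=$1
    consul="consul://$2:8500"
    echo "docker-machine create -d opennebula" \
        "--opennebula-network-name private" \
        "--opennebula-image-name boot2docker" \
        "--opennebula-b2d-size 10240" \
        "--swarm --swarm-discovery=$consul" \
        "--engine-opt cluster-store=$consul" \
        "--engine-opt cluster-advertise=eth0:2376" \
        "$name"
}

# Create nodes swarm-node-01 .. swarm-node-NN ($1 nodes, Consul IP $2).
# With DRY_RUN=1 the commands are only printed, for review.
create_swarm_nodes() {
    count=$1
    consul_ip=$2
    i=1
    while [ "$i" -le "$count" ]; do
        name=$(printf 'swarm-node-%02d' "$i")
        cmd=$(swarm_node_cmd "$name" "$consul_ip")
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$cmd"
        else
            # Unquoted on purpose: word splitting turns the string into arguments.
            $cmd || return 1
        fi
        i=$((i + 1))
    done
}
```

For example, `DRY_RUN=1 create_swarm_nodes 3 "$CONSUL_IP"` prints the commands for swarm-node-01 to swarm-node-03, and dropping `DRY_RUN=1` runs them. Building the command as one string is fine for these option values but would break on values containing spaces.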

Finally, we can connect to the swarm like this:

$ eval $(docker-machine env --swarm swarm-master)

$ docker info

Containers: 3

Running: 3

Paused: 0

Stopped: 0

Images: 2

Server Version: swarm/1.1.2

Role: primary

Strategy: spread

Filters: health, port, dependency, affinity, constraint

Nodes: 2

swarm-master: 10.3.4.29:2376

└ Status: Healthy

└ Containers: 2

└ Reserved CPUs: 0 / 1

└ Reserved Memory: 0 B / 1.021 GiB

└ Labels: executiondriver=native-0.2, kernelversion=4.1.18-boot2docker, operatingsystem=Boot2Docker 1.10.2 (TCL 6.4.1); master : 611be10 - Tue Feb 23 00:06:40 UTC 2016, provider=opennebula, storagedriver=aufs

└ Error: (none)

└ UpdatedAt: 2016-02-29T16:08:41Z

swarm-node-01: 10.3.4.30:2376

└ Status: Healthy

└ Containers: 1

└ Reserved CPUs: 0 / 1

└ Reserved Memory: 0 B / 1.021 GiB

└ Labels: executiondriver=native-0.2, kernelversion=4.1.18-boot2docker, operatingsystem=Boot2Docker 1.10.2 (TCL 6.4.1); master : 611be10 - Tue Feb 23 00:06:40 UTC 2016, provider=opennebula, storagedriver=aufs

└ Error: (none)

└ UpdatedAt: 2016-02-29T16:08:12Z

Plugins:

Volume:

Network:

Kernel Version: 4.1.18-boot2docker

Operating System: linux

Architecture: amd64

CPUs: 2

Total Memory: 2.043 GiB

Name: swarm-master
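Two quick checks can be scripted from here: that your shell talks to the Swarm manager rather than a single engine, and that every node reports as healthy. A minimal sketch, assuming Docker Machine's default Swarm manager port 3376 (the engines listen on 2376, as the node addresses above show) and the standalone Swarm 1.x `docker info` format shown above; `is_swarm_endpoint` and `swarm_all_healthy` are hypothetical helpers.

```sh
#!/bin/sh
# Succeed if a DOCKER_HOST value points at the Swarm manager that Docker
# Machine configures (assumed port 3376) rather than a single engine (2376).
is_swarm_endpoint() {
    case "$1" in
        tcp://*:3376) return 0 ;;
        *) return 1 ;;
    esac
}

# Read `docker info` output from a standalone Swarm manager on stdin and
# succeed only if the number of "Status: Healthy" lines equals "Nodes: N".
swarm_all_healthy() {
    awk '
        /^Nodes:/ { nodes = $2 + 0 }
        /Status: Healthy/ { healthy++ }
        END {
            if (nodes > 0 && healthy + 0 == nodes) exit 0
            exit 1
        }
    '
}
```

Usage: `is_swarm_endpoint "$DOCKER_HOST" && docker info | swarm_all_healthy && echo "all nodes healthy"`.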

The cluster-store and cluster-advertise options are required to create multi-host networks with the overlay driver within a Swarm cluster.
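To confirm that an engine actually picked up these options, you can query it directly rather than through the Swarm manager. A minimal sketch, assuming the engine's `docker info` output contains a `Cluster store:` line when the option is set (Docker Engines of this era print one; verify on your version); `engine_cluster_store` is a hypothetical helper.

```sh
#!/bin/sh
# Print the value of the "Cluster store:" line from an engine's
# `docker info` output read on stdin; fail if the line is missing.
engine_cluster_store() {
    awk -F': ' '
        /^Cluster store:/ { print $2; found = 1 }
        END { if (!found) exit 1 }
    '
}
```

Usage: `docker $(docker-machine config swarm-node-01) info | engine_cluster_store` should print `consul://` followed by the Consul IP and port 8500.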

Once the Swarm cluster is running, we can create a network with the overlay driver:

$ docker network create --driver overlay --subnet=10.0.1.0/24 overlay_net

and then check that the network has been created:

$ docker network ls
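In a script, you may prefer to check the listing programmatically. A minimal sketch, assuming the `NETWORK ID`, `NAME`, `DRIVER` column order of `docker network ls`; `has_overlay_network` is a hypothetical helper.

```sh
#!/bin/sh
# Succeed if `docker network ls` output on stdin lists a network named $1
# that uses the overlay driver (columns assumed: NETWORK ID, NAME, DRIVER).
has_overlay_network() {
    awk -v name="$1" '
        NR > 1 && $2 == name && $3 == "overlay" { found = 1 }
        END { if (found) exit 0; exit 1 }
    '
}
```

Usage: `docker network ls | has_overlay_network overlay_net && echo "overlay_net is ready"`.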

To test the network, we can run an nginx server on swarm-master:

$ docker run -itd --name=web --net=overlay_net --env="constraint:node==swarm-master" nginx

and fetch the nginx server’s home page from a container deployed on another cluster node:

$ docker run -it --rm --net=overlay_net --env="constraint:node==swarm-node-01" busybox wget -O- http://web
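The same test can be wrapped for scripting. A minimal sketch, assuming the Swarm environment from `docker-machine env --swarm swarm-master` is loaded and that `web` serves the stock nginx index page, whose title is `Welcome to nginx!`; `fetch_web_from_node` and `is_nginx_welcome` are hypothetical helper names. It drops `-it`, since no terminal is needed when the output is piped.

```sh
#!/bin/sh
# Fetch http://web over overlay_net from a busybox container pinned to
# node $1 via a Swarm constraint. Needs the swarm environment loaded.
fetch_web_from_node() {
    node=$1
    docker run --rm --net=overlay_net --env="constraint:node==$node" \
        busybox wget -q -O- http://web
}

# Succeed if the HTML on stdin looks like nginx's default index page.
is_nginx_welcome() {
    grep -q '<title>Welcome to nginx!</title>'
}
```

Usage: `fetch_web_from_node swarm-node-01 | is_nginx_welcome && echo "overlay network works across nodes"`.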

As you can see, thanks to the Docker Machine OpenNebula Driver plugin, you can deploy a real, production-ready Swarm in a matter of minutes.

In the next post, we will show you how to use OneFlow to provide your Docker Swarm with automatic elasticity. Stay tuned!

Great work, if OpenNebula is to be used as your container cluster manager.

The problem I see is the overlap with (far more advanced) solutions like:

http://rancher.com/rancher

Besides Docker Swarm, you can use it with Kubernetes or with their own Cattle (https://github.com/rancher/cattle) as an orchestration engine.

For sure Rancher will dominate in the future, but it will never integrate the missing “bare metal pieces”. So it makes sense to use it with MAAS or, of course, OpenNebula.

There should be a simple way to integrate with Rancher instead of building existing functionality again and again. Some videos:

https://www.youtube.com/channel/UCh5Xtp82q8wjijP8npkVTBA/videos

On-demand activation of Docker containers with systemd is possible with OpenNebula and Jeff Lindsay’s Consul container. See Jeff here:

https://www.youtube.com/watch?v=aBgObyaNkdc

Imagine running a thousand WordPress or Drupal sites on standard hardware that stay fast when only a fraction of them are in use at a time.

https://developer.atlassian.com/blog/2015/03/docker-systemd-socket-activation

This would be a unique feature offered by OpenNebula!

Oh, sorry, I should have said what the last comment is about:

It is all about “socket activation”.

http://0pointer.de/blog/projects/socket-activation.html