We’re entering a new phase in the evolution of AI infrastructure — one defined by the need for high performance, scalability, and digital sovereignty. As organizations accelerate their AI initiatives, they’re realizing that traditional cloud-native stacks alone can’t deliver the control and efficiency these workloads demand.

That’s why we’ve developed the OpenNebula AI Factory Reference Architecture, a practical framework for building AI Factories — high-performance, secure, and sovereign infrastructures for AI training and inference.

This white paper brings together insights from large-scale deployments and outlines how OpenNebula unifies high-performance computing, virtualization, and cloud orchestration within a single platform, enabling organizations to run GPU-accelerated AI workloads with bare-metal efficiency and full operational sovereignty.

With the growing reliance on GPU-intensive workloads and increasing regulatory attention to data sovereignty, organizations are rethinking how they build and manage their AI infrastructure. Around the world, cloud providers, HPC centers, telecommunications operators, and public institutions are investing in AI Factories — large-scale environments designed to train, deploy, and operate AI models efficiently and securely.

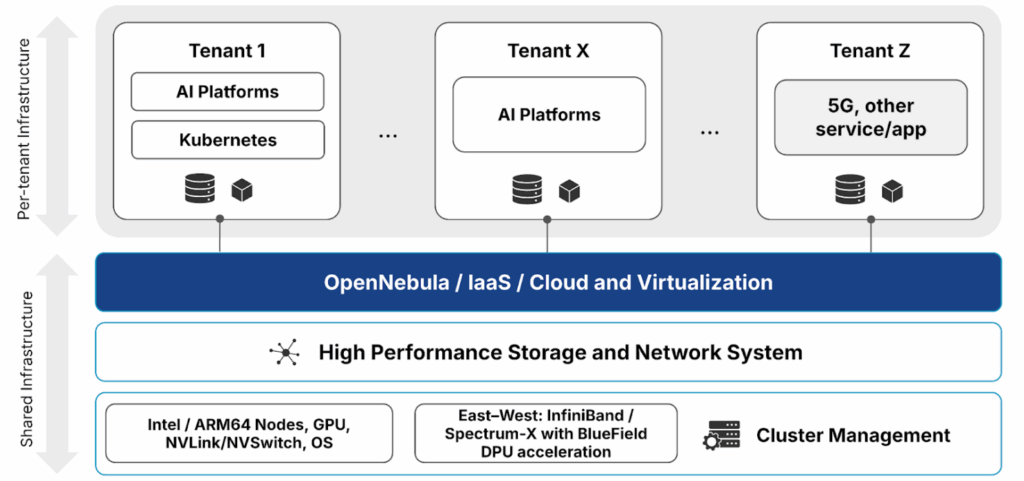

At the core of this architecture lies OpenNebula’s Infrastructure-as-a-Service (IaaS) layer, which provides unified orchestration, virtualization, and automation across distributed clusters. This design establishes a clear separation between shared infrastructure management and per-tenant operations, enabling organizations to deliver AI-as-a-Service models with full resource isolation, scalability, and control.

Beyond Kubernetes: Deep Integration and True Multi-Tenancy

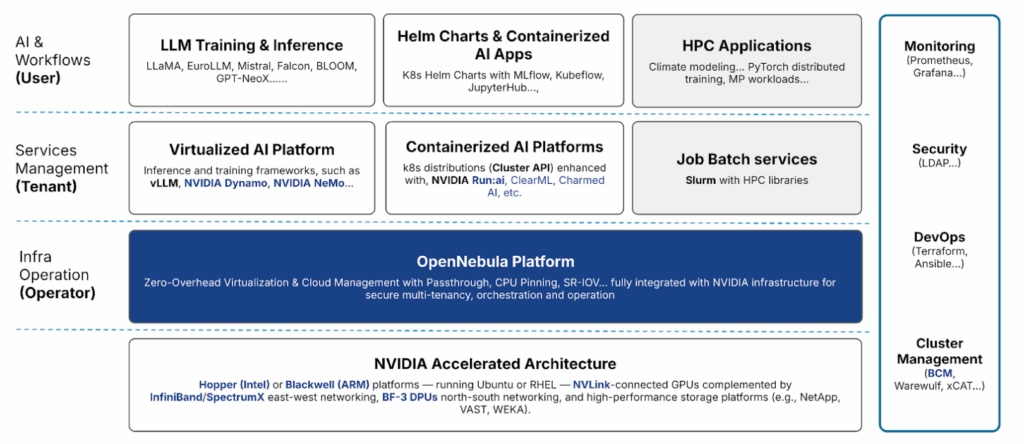

While Kubernetes has become the standard for container orchestration, it’s not enough on its own to deliver true multi-tenancy or deep hardware integration. The white paper explains how OpenNebula complements Kubernetes by managing the underlying infrastructure — including GPU servers, storage, and networking — with direct integration of NVIDIA’s accelerated computing stack.

OpenNebula natively supports the latest Hopper and Blackwell GPUs, NVLink, BlueField DPUs, InfiniBand, and Spectrum-X networking, ensuring that AI workloads can run with the performance and efficiency required for both training and inference.

This approach combines HPC-style performance with cloud-native flexibility, allowing organizations to balance efficiency, scalability, and sovereignty under a single orchestration layer.

Building the Foundations for Sovereign AI Infrastructure

The AI Factory Reference Architecture also outlines the key building blocks of a modern AI ecosystem — from GPU-accelerated servers and high-performance storage systems to AI platforms, DevOps tools, and monitoring frameworks.

Each tenant operates within an isolated Virtual Data Center (VDC), managing its own AI platforms and frameworks — from Kubernetes-based environments such as Run:ai, Kubeflow, or NVIDIA Dynamo, to bare-metal GPU execution for performance-critical workloads.

By leveraging advanced technologies such as PCI passthrough, SR-IOV, and CPU pinning, OpenNebula achieves near-zero virtualization overhead, ensuring that AI and HPC workloads run with bare-metal efficiency while maintaining the agility and isolation required by modern multi-tenant infrastructures.
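To make the passthrough and pinning idea more concrete, here is a minimal sketch of what a VM template requesting dedicated GPUs and pinned CPU cores might look like. It is an illustration, not taken from the white paper. The helper `render_gpu_vm_template` and the VM name are hypothetical. The `PCI` and `TOPOLOGY` attribute names follow our understanding of OpenNebula's VM template syntax and should be checked against the documentation for your version. `10de` is NVIDIA's PCI vendor ID and `0302` is the PCI class for 3D controllers. SR-IOV virtual functions are not covered here.

```python
def render_gpu_vm_template(name, vcpus, memory_mb, gpu_count=1,
                           pci_vendor="10de", pci_class="0302"):
    """Build OpenNebula-style VM template text (illustrative sketch only).

    Assumptions: attribute names (PCI, TOPOLOGY, PIN_POLICY) mirror
    OpenNebula's template syntax; verify them for your release.
    """
    if vcpus < 1 or gpu_count < 0:
        raise ValueError("vcpus must be >= 1 and gpu_count must be >= 0")

    lines = [
        f'NAME = "{name}"',
        f'CPU = "{vcpus}"',
        f'VCPU = "{vcpus}"',
        f'MEMORY = "{memory_mb}"',
        # CPU pinning: ask for each vCPU to be placed on a dedicated host core.
        'TOPOLOGY = [ PIN_POLICY = "CORE", SOCKETS = "1", '
        f'CORES = "{vcpus}", THREADS = "1" ]',
    ]
    # PCI passthrough: one PCI entry per GPU, matched by vendor and class.
    lines += [f'PCI = [ VENDOR = "{pci_vendor}", CLASS = "{pci_class}" ]'] * gpu_count
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_gpu_vm_template("gpu-worker", vcpus=8, memory_mb=65536, gpu_count=2))
```

Matching by vendor and class rather than by a specific device ID keeps the sketch independent of any particular GPU model. A real deployment would likely filter more narrowly.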

The OpenNebula AI Factory Reference Architecture provides a proven foundation for building sovereign, scalable, and high-performance AI infrastructures, whether for internal use or as the basis for AI-as-a-Service or GPU-as-a-Service offerings.

This white paper reflects our commitment to helping enterprises and public institutions design, deploy, and operate AI infrastructure that combines cloud agility, HPC performance, and full sovereign control.
