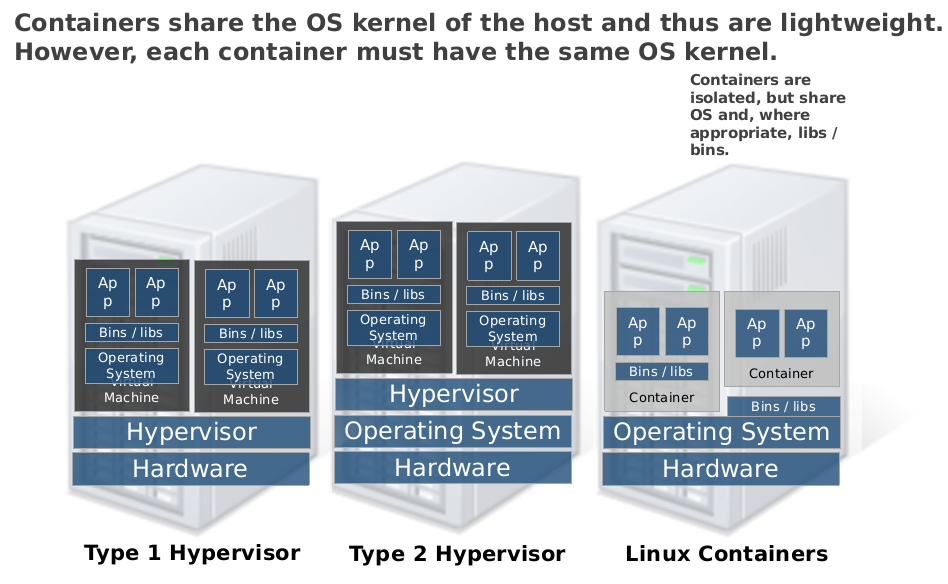

Operating-system-level virtualization, a technology that has gained considerable traction in cloud infrastructures in recent years, offers better performance and scalability than hardware-level virtualization technologies such as Hardware-Assisted Virtual Machines (HVM), because containers share the host kernel instead of running a full guest operating system on virtualized hardware.

However, OS-level virtualization has not gained the acceptance it deserves in private cloud scenarios. Private cloud managers such as OpenStack and Eucalyptus do not offer the support this type of technology needs. OpenNebula, a flexible cloud manager, has earned a very good reputation over the last few years, so strengthening its support for OS-level virtualization could be a key strategic decision.

This is why LXCoNe was created. It is a virtualization and monitoring driver for OpenNebula, distributed as an add-on, that enables OpenNebula to deploy LXC containers and helps achieve better interoperability, performance and scalability in OpenNebula clouds. The driver is stable and ready for release, and it is already in use, with great results, in the data center of the Instituto Superior Politécnico José Antonio Echeverría in Cuba. The team is still working on additional features to improve it, listed below.

Features and Limitations

The driver's main features are:

- Deployment of containers on file systems, Logical Volume Manager (LVM) volumes and Ceph.

- Attachment and detachment of network interface cards and disks, both before the container is created and while it is running.

- Monitoring of container and node resource usage.

- Powering off, suspending, stopping, undeploying and rebooting running containers.

- VNC support.

- Snapshot support when using file systems.

- RAM usage limits for containers.
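The post does not say how LXCoNe enforces the RAM limit. One plausible mapping, shown here purely as an assumption, translates a VM template's MEMORY attribute (which OpenNebula expresses in megabytes) into an LXC cgroup setting: `lxc.cgroup.memory.limit_in_bytes` on cgroup v1 hosts, or `lxc.cgroup2.memory.max` on hosts using the unified cgroup v2 hierarchy. The helper name `memory_limit_line` is hypothetical and is not part of the driver.

```python
def memory_limit_line(memory_mb: int, cgroup_v2: bool = False) -> str:
    """Translate an OpenNebula MEMORY value (in MB) into an LXC config line.

    Hypothetical helper: LXCoNe's actual mechanism is not documented in the post.
    """
    if memory_mb <= 0:
        raise ValueError("MEMORY must be a positive number of megabytes")
    limit_bytes = memory_mb * 1024 * 1024  # MB -> bytes
    # cgroup v1 hosts limit memory through the memory controller's
    # limit_in_bytes file; cgroup v2 hosts use memory.max instead.
    key = "lxc.cgroup2.memory.max" if cgroup_v2 else "lxc.cgroup.memory.limit_in_bytes"
    return f"{key} = {limit_bytes}"


if __name__ == "__main__":
    print(memory_limit_line(512))                  # lxc.cgroup.memory.limit_in_bytes = 536870912
    print(memory_limit_line(512, cgroup_v2=True))  # lxc.cgroup2.memory.max = 536870912
```

Emitting the limit as a plain byte count keeps the value unambiguous regardless of which cgroup version the host uses.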

It lacks the following features, on which we are currently working:

- Limiting container CPU usage.

- Live migration of containers.

Virtualization Solutions

OpenNebula was designed to be completely independent of the underlying technologies. However, when the project started, the only supported hypervisors were Xen and KVM, and it was not designed with OS-level virtualization in mind. This probably influenced the way OpenNebula manages physical and virtual resources. For this reason, and because of the differences between the two types of virtualization, there are a few things to keep in mind when using the driver:

Disks

When a disk is successfully attached, it appears inside the container at /media/<Disk_ID>. To detach it, the disk must still be mounted at that same path inside the container, and it must not be in use; otherwise the detach operation deliberately fails.
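The driver enforces these conditions itself, and the post does not show how. As a hypothetical illustration, the sketch below checks the same two preconditions from inside the container before you request a detach: that /media/<Disk_ID> is still a mount point, and that no process has its working directory or an open file under it (roughly what `fuser -m` reports). The function names `paths_in_use` and `can_detach` and the /proc scan are assumptions, not LXCoNe code.

```python
import os


def paths_in_use(mount_path: str, proc_root: str = "/proc") -> set:
    """Return PIDs whose cwd or open file descriptors point at or under mount_path.

    Rough approximation of `fuser -m`; processes we cannot inspect are skipped.
    """
    prefix = os.path.realpath(mount_path).rstrip("/") + "/"
    busy = set()
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/self or /proc/meminfo
        pid_dir = os.path.join(proc_root, entry)
        links = [os.path.join(pid_dir, "cwd")]
        fd_dir = os.path.join(pid_dir, "fd")
        try:
            links += [os.path.join(fd_dir, fd) for fd in os.listdir(fd_dir)]
        except OSError:
            pass  # process exited, or we lack permission to list its fds
        for link in links:
            try:
                target = os.readlink(link)
            except OSError:
                continue
            # Appending "/" keeps /media/30 from matching /media/3.
            if (target + "/").startswith(prefix):
                busy.add(int(entry))
                break
    return busy


def can_detach(disk_id: int, media_root: str = "/media") -> tuple:
    """Check the two detach preconditions described in the post."""
    path = os.path.join(media_root, str(disk_id))
    if not os.path.ismount(path):
        return False, f"{path} is not a mount point"
    pids = paths_in_use(path)
    if pids:
        return False, f"{path} is in use by PIDs {sorted(pids)}"
    return True, "ok"


if __name__ == "__main__":
    ok, reason = can_detach(3)  # hypothetical Disk_ID
    print(ok, reason)
```

If the check reports busy PIDs, stopping those processes (or moving them out of the directory) before asking OpenNebula to detach the disk should avoid the deliberate failure.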

Network interfaces (NIC)

If you hot-attach a NIC, it appears inside the container with its link up but without any configuration, such as an IP address. This differs from NICs specified in the template before the container is created: those appear fully configured and ready to use, unless you explicitly request otherwise.
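A hot-attached NIC therefore has to be configured by hand inside the container. A minimal sketch, assuming the interface shows up as `eth1` and that you take the address and gateway from the lease OpenNebula assigned to the NIC (for example, from `onevm show`): it builds standard iproute2 commands and only runs them when asked. The helper names and example addresses are hypothetical.

```python
import ipaddress
import subprocess
from typing import List, Optional


def nic_setup_commands(iface: str, cidr: str, gateway: Optional[str] = None) -> List[List[str]]:
    """Build iproute2 commands that configure a hot-attached NIC.

    iface, cidr and gateway are hypothetical inputs; take them from the lease
    OpenNebula assigned to the NIC.
    """
    addr = ipaddress.ip_interface(cidr)  # validates input such as "10.0.0.5/24"
    cmds = [
        ["ip", "link", "set", "dev", iface, "up"],  # harmless if the link is already up
        ["ip", "addr", "add", str(addr), "dev", iface],
    ]
    if gateway is not None:
        gw = ipaddress.ip_address(gateway)
        if gw not in addr.network:
            raise ValueError(f"gateway {gw} is not in {addr.network}")
        cmds.append(["ip", "route", "add", "default", "via", str(gw), "dev", iface])
    return cmds


def run_commands(cmds: List[List[str]]) -> None:
    """Execute the commands; requires root privileges inside the container."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    for cmd in nic_setup_commands("eth1", "10.0.0.5/24", "10.0.0.1"):
        print(" ".join(cmd))
```

Pass a gateway only if this NIC should carry the default route; if the container already has one through another interface, `ip route add default` will fail.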

Installation

Want to try it? The drivers are part of the OpenNebula Add-on Catalog, and the installation process is explained step by step in this guide.

Contributions, feedback and issue reports are very welcome, either through the GitHub repository or by email:

José Manuel de la Fé Herrero: jmdelafe92@gmail.com

Sergio Vega Gutiérrez: sergiojvg92@gmail.com
