The focus of our research group at Sapienza University of Rome is cybersecurity. One of our current tasks involves developing models and algorithms for threat modeling and network hardening in computer networks. In practice, we generate huge “attack graphs” representing all possible ways an attacker could move laterally in a network by compromising networked systems. In this post I will provide an introduction to our work and to the role OpenNebula plays in our approach to cybersecurity research.

One of the analyses we perform on computer networks involves tracking the impact that cyber-attacks have on an organization’s mission. We do this by linking attack paths on networked assets with the dependencies of those assets within the organization’s business processes. One application of such analyses is to propose optimal, risk-aware mitigation actions to protect corporate networks.
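To make the idea more concrete, here is a minimal Python sketch of how attack paths can be linked to business processes. It is not our actual model: the hosts, the edges and the dependency map are all made up for illustration, and real attack graphs are far larger and carry vulnerability information on each edge.

```python
from collections import defaultdict

# Hypothetical attack graph: an edge (u, v) means that an attacker who
# controls u can compromise v, e.g. by exploiting a vulnerable service on v.
ATTACK_EDGES = [
    ("internet", "web-server"),
    ("web-server", "app-server"),
    ("web-server", "file-server"),
    ("app-server", "db-server"),
    ("file-server", "db-server"),
]

# Hypothetical dependency map: business processes that rely on each asset.
PROCESS_DEPENDENCIES = {
    "db-server": {"billing", "order-management"},
    "file-server": {"document-archive"},
}


def build_graph(edges):
    """Turn a list of (source, destination) pairs into an adjacency list."""
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)
    return graph


def attack_paths(graph, source, target, path=None):
    """Yield every simple (cycle-free) path from source to target."""
    path = (path or []) + [source]
    if source == target:
        yield path
        return
    for nxt in graph.get(source, []):
        if nxt not in path:
            yield from attack_paths(graph, nxt, target, path)


def impacted_processes(paths, dependencies):
    """Collect the processes that depend on any asset along the given paths."""
    impacted = set()
    for path in paths:
        for asset in path:
            impacted |= dependencies.get(asset, set())
    return impacted


graph = build_graph(ATTACK_EDGES)
paths = list(attack_paths(graph, "internet", "db-server"))
for p in paths:
    print(" -> ".join(p))
print(sorted(impacted_processes(paths, PROCESS_DEPENDENCIES)))
```

Enumerating every simple path is only practical on small graphs like this one; it is shown here because it makes the link between a path and the processes it touches easy to see.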

One of the big problems we faced when designing this kind of analysis was evaluating our algorithms on real (or, at least, realistic!) scenarios. Very few datasets of actual networks exist, and the networks they describe are typically very small in scale, so they unfortunately provide very limited information. Furthermore, the final aim of systems of this kind is to handle live, dynamic environments that can be scanned to get real-time information from the various machines, updating the attack graph accordingly.

A solution based on OpenNebula

To accomplish these tasks, we designed an infrastructure built on top of OpenNebula. This solution allowed us to automatically deploy a target networked environment (what we call a testbed) on a dedicated cluster via infrastructure-as-code abstractions. This environment supports data collection as well as various cyber experimentation tasks.

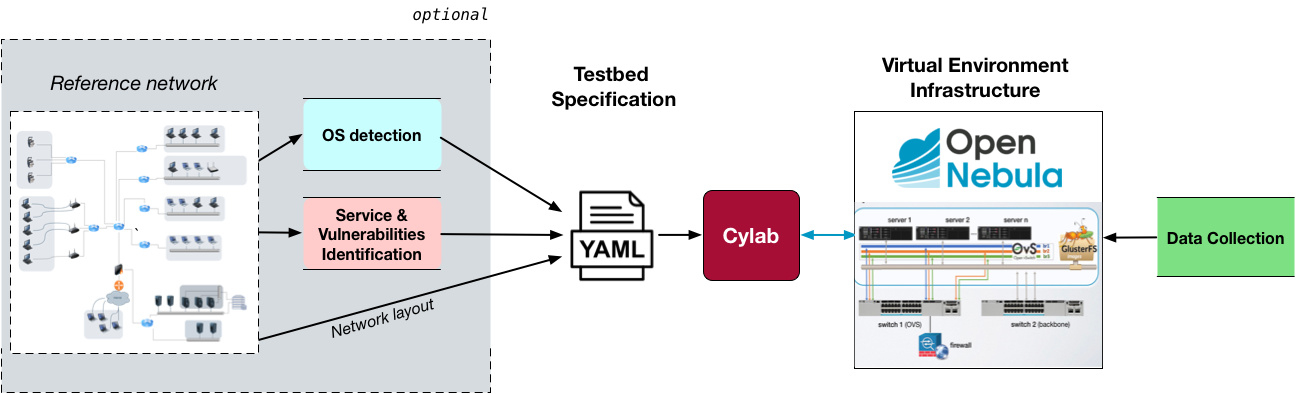

Let’s explore the capabilities of our system! Our testbed could be, for instance, a virtual version of an existing network that we want to reproduce. To do so, we could perform tasks such as OS and service detection, as well as identification of existing machines on the reference network, together with running applications and their vulnerabilities.

We can merge this information with the network layout to build a testbed specification. In our case, we produced a YAML file describing the topology and characteristics of our testbed. The detection/discovery step is, obviously, optional; one can always write a YAML file from scratch, describing a custom testbed—that’s why we won’t be looking into the specifics of the detection steps here.

To control the infrastructure we developed CyLab, an application that takes these specifications and implements them on an OpenNebula-based cluster. The infrastructure is designed so that we can collect relevant information, such as machine metadata and network traffic.

What I’d like to do at this point is share with you some of the technical and design choices we made when implementing this project, primarily those concerning the virtual environment infrastructure we decided to use, as well as those concerning the storage components and the network layer.

Virtual environment infrastructure

Part of our project involved comparing the two main open source Virtual Infrastructure Managers (VIMs) by market penetration: OpenStack and OpenNebula. We approached this comparative analysis using a varied set of criteria, including internal organization, storage, networking, hypervisors and governance [1].

A number of crucial aspects drove our choice of OpenNebula over OpenStack:

- OpenStack is made up of many submodules, each a subproject in its own right, such as Heat (orchestration), Swift (object storage), Neutron (networking) and Keystone (user management and authentication). Each of them has a different maturity level, integration model and API.

- OpenNebula, by contrast, has a largely monolithic core and a single endpoint, managed through a coherent set of APIs and a single user-friendly GUI (Sunstone).

- OpenStack is controlled by a Foundation whose priorities are driven by vendors that also sell their enterprise-grade proprietary implementations of its subcomponents (in the form of ‘support’ extensions).

- OpenNebula releases only a single, free, user-driven version of its product, and the whole project is managed by a single vendor backed by a community of developers.

After that initial choice, we can confirm now that OpenNebula is a simple product to set up and use, but at the same time it is robust and enterprise-ready.

Storage layer

Once we had decided to use OpenNebula as our Virtual Infrastructure Manager, we moved on to the next challenge: how to perform one of the fundamental tasks of a virtualization platform, namely accessing a repository of VM images.

Typically, in OpenNebula, new VM templates—or appliances—are added to a single node either manually or by using its command-line interface (CLI) or the Sunstone GUI to download them from the project’s public Marketplace. Of course, a private Marketplace can also be set up to store corporate VM templates.

Appliances from a local Marketplace have to be accessible at any time in order to be instantiated on demand on any of the nodes that form our virtualized infrastructure. However, using a centralized repository would result in a high network load for every new VM spawn.

In our case we decided to use GlusterFS, the well-known open source distributed filesystem, to aggregate storage mount points (“bricks”) from a pool of trusted servers. This gave us the storage infrastructure we needed for our Images Datastore.

In particular we chose GlusterFS’s replicated mode, in which exact copies of the data are maintained on all bricks. This fosters data locality at VM instantiation time, something similar to what can be achieved with alternative open source storage solutions such as Lustre and Ceph.

Networking layer

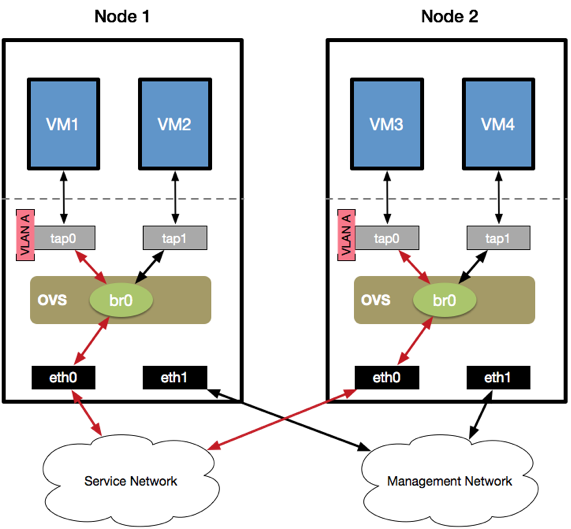

For the networking layer—in particular for communication across physical nodes—we chose Open vSwitch (OVS), a software implementation of a multilayer network switch. Every virtual interface belongs to a virtual network, which in turn sits on a specific bridge handled by OVS.

OVS maintains a MAC address database to keep track of the VM addresses in the various LANs, and inspects incoming frames to decide whether to switch a frame to a local virtual interface or forward it to a physical interface so that it can be delivered to other physical nodes. In the figure above, for instance, bridge br0 is aware of the tap0-eth0 virtual-to-physical mapping.

VLANs can be configured directly from OpenNebula to sit on a specific bridge, and multiple bridges can be used to separate VLANs logically. OVS also offers SPAN/RSPAN functionality that enables efficient data collection at the bridge level, which was quite handy for us.
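The MAC-learning behaviour of a bridge can be illustrated with a toy model in Python. This is not OVS code and it ignores VLANs, broadcast addresses and entry ageing; the port names and MAC addresses are placeholders chosen for the example.

```python
class LearningSwitch:
    """Toy model of a MAC-learning bridge (illustrative only, not OVS code)."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port where it was last seen

    def receive(self, in_port, src_mac, dst_mac):
        """Process one frame and return the set of ports it is sent out on."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress one.
            return self.ports - {in_port}
        if out_port == in_port:
            # The destination sits behind the ingress port: nothing to forward.
            return set()
        return {out_port}


# tap0 and tap1 stand for local VM interfaces, eth0 for the physical uplink.
bridge = LearningSwitch(ports=["tap0", "tap1", "eth0"])
first = bridge.receive("tap0", "52:54:00:00:00:01", "52:54:00:00:00:02")
reply = bridge.receive("eth0", "52:54:00:00:00:02", "52:54:00:00:00:01")
second = bridge.receive("tap0", "52:54:00:00:00:01", "52:54:00:00:00:02")
print(sorted(first), sorted(reply), sorted(second))
```

The first frame is flooded because the destination is still unknown; once the reply has been seen on eth0, later frames for that address go out on eth0 only.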

Data collection

An important aspect of what we want to be able to do as part of our cybersecurity analysis is collecting traffic from a specific testbed we have deployed on our virtualized environment.

The VMs of a testbed are usually deployed on different physical hosts, and their virtual network interfaces sit on OVS bridges, with different physical nodes connected to each other through a switch. Of course, a particular VM can have multiple interfaces.

If we want to collect all network traffic in the testbed without missing the traffic between VMs deployed on the same host, OVS comes to the rescue, as it can be configured to use a Switched Port Analyzer (SPAN). SPAN mirrors the traffic from each of the network interfaces of interest to a designated output port (the SPAN port).
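As a rough sketch of what such a mirror looks like in practice, the Python helper below assembles an `ovs-vsctl` invocation that creates a Mirror record on a bridge. It follows the pattern shown in the Open vSwitch documentation, but the bridge, port and mirror names are placeholders, and this is not necessarily the exact configuration we run. The function only builds the command; it does not execute it.

```python
import shlex


def build_span_command(bridge, mirror_name, source_ports, output_port):
    """Return an ovs-vsctl argument list mirroring source_ports to output_port."""
    if not source_ports:
        raise ValueError("at least one source port is required")
    # Attach the mirror (defined further down as @m) to the bridge.
    cmd = ["ovs-vsctl", "--", "set", "Bridge", bridge, "mirrors=@m"]
    refs = []
    for index, port in enumerate(source_ports):
        ref = f"@src{index}"
        refs.append(ref)
        # Bind a symbolic name to the existing Port record.
        cmd += ["--", f"--id={ref}", "get", "Port", port]
    cmd += ["--", "--id=@out", "get", "Port", output_port]
    selected = ",".join(refs)
    cmd += [
        "--", "--id=@m", "create", "Mirror", f"name={mirror_name}",
        f"select-src-port={selected}",  # frames received on these ports
        f"select-dst-port={selected}",  # frames sent out of these ports
        "output-port=@out",
    ]
    return cmd


# Placeholder names: two VM interfaces mirrored to a capture port on br0.
cmd = build_span_command("br0", "testbed-span", ["tap0", "tap1"], "span0")
print(shlex.join(cmd))
```

Selecting the same ports as both source and destination captures traffic in both directions, which is what makes VM-to-VM traffic on the same host visible on the SPAN port.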

Typically, to set up a cybersecurity-oriented emulation environment like ours, the required physical infrastructure would consist of a number of physical servers, with one of them acting as the OpenNebula Front-end—this is the server running the OpenNebula daemon and the Sunstone GUI. OpenNebula comes with HA support, but in our case this was not required.

The rest of the nodes in the environment just need to go through a simple configuration process, depending on the specific virtualization technology you are planning to use (KVM, LXD, etc.). If OpenNebula’s Image Datastore is managed via GlusterFS, as in our case, the physical servers should be connected through a switch to a dedicated storage network.

Deploying testbeds automatically with CyLab

Now that we’ve had a look at the bits and bytes, let’s talk about CyLab! CyLab (“Cyber range laboratory”) is a software application we developed a year and a half ago (there was no official Terraform Provider for OpenNebula back then) to automate the deployment of a virtual testbed on our OpenNebula-based environment from a YAML description file.

Our YAML description files tend to contain specific portions devoted to configuration of VLANs, virtual routers and firewalls. They also include information regarding the operating system (i.e. which specific VM template to instantiate), VM users, and the services that have to be installed on the machines, along with custom init/configuration scripts.

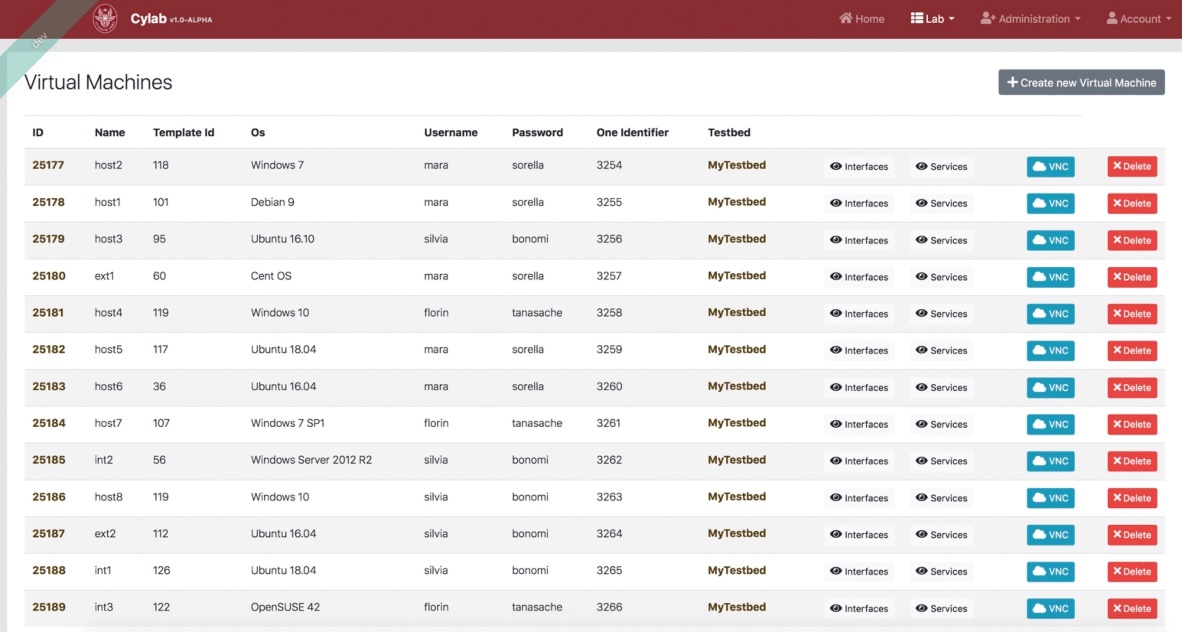
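To give a feel for the kind of consistency checks a description file lends itself to, here is a small Python sketch. The testbed is written as the dictionary a YAML parser would produce, and every field name in it is hypothetical: it is not CyLab’s real schema, only an illustration of the categories of information listed above.

```python
import ipaddress

# Hypothetical testbed description (field names are illustrative only).
TESTBED = {
    "name": "demo-testbed",
    "vlans": [
        {"name": "dmz", "cidr": "10.0.1.0/24"},
        {"name": "internal", "cidr": "10.0.2.0/24"},
    ],
    "hosts": [
        {"name": "web01", "template": "ubuntu-18.04", "vlans": ["dmz"],
         "users": ["alice"], "services": ["apache2"]},
        {"name": "fw01", "template": "firewall-appliance",
         "vlans": ["dmz", "internal"], "users": [], "services": []},
    ],
}


def validate_testbed(spec):
    """Return a list of problems; an empty list means the spec is consistent."""
    problems = []
    declared = set()
    for vlan in spec.get("vlans", []):
        try:
            # Raises ValueError for malformed networks or set host bits.
            ipaddress.ip_network(vlan["cidr"])
        except ValueError:
            problems.append(f"vlan {vlan['name']}: invalid CIDR {vlan['cidr']}")
        declared.add(vlan["name"])
    seen_hosts = set()
    for host in spec.get("hosts", []):
        name = host["name"]
        if name in seen_hosts:
            problems.append(f"host {name}: duplicate name")
        seen_hosts.add(name)
        if not host.get("template"):
            problems.append(f"host {name}: no VM template specified")
        for vlan in host.get("vlans", []):
            if vlan not in declared:
                problems.append(f"host {name}: unknown vlan {vlan}")
    return problems


print(validate_testbed(TESTBED))
```

Catching an undeclared VLAN or a missing template before any VM is instantiated is much cheaper than discovering it halfway through a deployment.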

After using this method to deploy a testbed, this is what our list of virtual machines looks like:

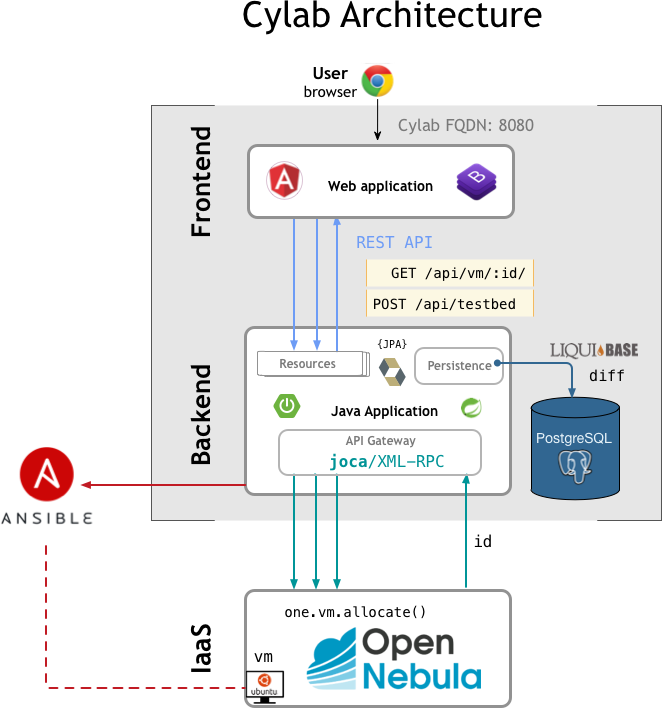

CyLab’s front-end is an Angular/Bootstrap web application, served to browser clients, for testbed creation and manipulation. It talks to the back-end via a REST API. CyLab’s back-end is a Java Spring Boot application with PostgreSQL persistence.

The back-end communicates with OpenNebula using its native XML-RPC API, which is one of the core components of the project’s powerful modular approach. The back-end also integrates with an Ansible server for service installation on deployed VMs.
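CyLab’s back-end is written in Java, so the following Python snippet is only meant to show the general shape of an XML-RPC call to OpenNebula, not our code. It uses the `one.vm.info` method and assumes the usual response layout (a success flag followed by either an XML document or an error message); the exact parameter list can vary between OpenNebula versions, and the endpoint and credentials in the comment are placeholders.

```python
import xml.etree.ElementTree as ET
import xmlrpc.client  # used in the usage example at the bottom


def fetch_vm_summary(server, session, vm_id):
    """Call one.vm.info and return the VM's id, name and numeric state."""
    response = server.one.vm.info(session, vm_id)
    success, body = response[0], response[1]
    if not success:
        # On failure the second element carries the error message.
        raise RuntimeError(f"OpenNebula returned an error: {body}")
    vm = ET.fromstring(body)  # on success it is the VM's XML description
    return {
        "id": int(vm.findtext("ID")),
        "name": vm.findtext("NAME"),
        "state": int(vm.findtext("STATE")),
    }


# Usage against a real front-end (placeholder host and credentials;
# 2633 is the default port of the OpenNebula XML-RPC server):
#
#   server = xmlrpc.client.ServerProxy("http://frontend.example.org:2633/RPC2")
#   print(fetch_vm_summary(server, "oneadmin:password", 0))
```

Passing the server proxy in as an argument keeps the function easy to exercise with a stub, without needing a running front-end.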

The applications are endless…

Such a versatile and robust environment provides some clear advantages, and the applications of an OpenNebula-based IaaS model like the one we’ve just described are numerous and not limited to cybersecurity analysis. In our case, these were some of the immediate applications:

- Cyber-range deployment for security training and testing: allows us to deploy custom scenarios to perform security training activities such as incident management training (detection, investigation, response) or general training for raising employees’ cybersecurity awareness.

- Dataset generation: collecting data from testbeds (e.g., network traffic) and, more generally, conducting any analysis that is not related to performance (e.g. user behavior profiling).

As an example of the latter, for our paper on this subject [1] we generated a dataset of benign and malicious network traffic collected from a testbed made of 52 hosts deployed in our emulation environment. For that purpose, we deployed a number of software agents written in Python on some of the VMs.

Those agents carry out a number of benign traffic simulation jobs (HTTP/HTTPS web browsing, SSH, Samba and SFTP) managed by a scheduler. The jobs capture different behavioral patterns. At the same time, we performed various cyber attacks following a diverse set of attack scenarios (e.g. Heartbleed, an RCE attack on the Drupal CMS, and a ransomware deployment) and collected all generated malicious traffic.
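The snippet below sketches one way a scheduler could turn a behavioural profile into a timeline of benign jobs. It is an assumption-laden illustration rather than a description of our agents: the job names, the weights and the choice of exponentially distributed gaps between jobs are all invented for the example.

```python
import random

# Hypothetical behavioural profile: relative weight of each benign job type.
PROFILE = {
    "http_browse": 5,
    "https_browse": 5,
    "ssh_session": 2,
    "samba_transfer": 1,
    "sftp_transfer": 1,
}


def build_schedule(profile, duration_s, mean_gap_s, seed=None):
    """Return (start_time_s, job_name) pairs covering duration_s seconds."""
    rng = random.Random(seed)  # seeded so that a run can be reproduced
    jobs = list(profile)
    weights = [profile[job] for job in jobs]
    schedule = []
    now = 0.0
    while True:
        # Exponential gaps give irregular, bursty start times.
        now += rng.expovariate(1.0 / mean_gap_s)
        if now >= duration_s:
            break
        job = rng.choices(jobs, weights=weights)[0]
        schedule.append((now, job))
    return schedule


schedule = build_schedule(PROFILE, duration_s=600, mean_gap_s=30, seed=1)
for start, job in schedule:
    print(f"{start:7.1f}s  {job}")
```

Changing the weights or the mean gap yields a different behavioural pattern, while fixing the seed makes a given run repeatable.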

The final dataset has been processed to obtain feature-rich labeled attack flows and benign traffic, all of which we have released publicly. Such extensive and comprehensive datasets are quite useful for cybersecurity analyses, such as training IDS and IPS classifiers and other machine-learning tasks, as well as for deep packet inspection investigation and other related activities.

We hope you have enjoyed this article, and that our experience with OpenNebula and other open source technologies will be useful to colleagues working in the cybersecurity sector. For questions and clarifications, please leave a comment on this site and we’ll be happy to answer!

PS – Our solution to create an emulation environment based on OpenNebula for cybersecurity analysis was presented a few months ago in Barcelona (Spain) at the OpenNebulaConf 2019, and also in Bangalore (India) at the International Conference on Distributed Computing and Networking 2019. An article about our work has appeared previously on The Register.

References:

[1] Building an Emulation Environment for Cyber Security Analyses of Complex Networked Systems. Florin Dragos Tanasache, Mara Sorella, Silvia Bonomi, Raniero Rapone and Davide Meacci (2019). IEEE International Conference on Distributed Computing and Networking 2019. arXiv version: https://arxiv.org/abs/1810.09752
