The LXC driver recently gained support for limiting CPU cores, among other resource limitation features. Unlike vanilla LXD, LXC requires the user to specify exactly which cores the container can use.

That’s why we developed CPU Pinning for the LXC driver: thanks to OpenNebula’s advanced CPU Topology feature, the OpenNebula scheduler can now take care of these core assignments 🎯
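For reference, "specifying exactly which cores" means writing an explicit core list into `lxc.cgroup2.cpuset.cpus`, using the kernel's cpuset list syntax (comma-separated core IDs and ranges such as `0-2,5`). The hypothetical helper `format_cpuset` below is a minimal sketch of building that value from a list of core IDs; it is illustrative only and not part of the LXC driver.

```python
def format_cpuset(cores):
    """Collapse core IDs into cpuset list syntax, e.g. [0, 1, 2, 5] -> "0-2,5"."""
    ids = sorted(set(cores))  # deduplicate and order the core IDs
    if not ids:
        return ""
    ranges = []
    start = prev = ids[0]
    for core in ids[1:]:
        if core == prev + 1:  # still inside a contiguous run
            prev = core
            continue
        ranges.append((start, prev))  # close the current run
        start = prev = core
    ranges.append((start, prev))
    # Single cores are written as "N", runs as "A-B".
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


if __name__ == "__main__":
    # Pin a container to cores 0, 1, 2 and 5 (hypothetical example).
    print(f"lxc.cgroup2.cpuset.cpus = '{format_cpuset([0, 1, 2, 5])}'")
```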

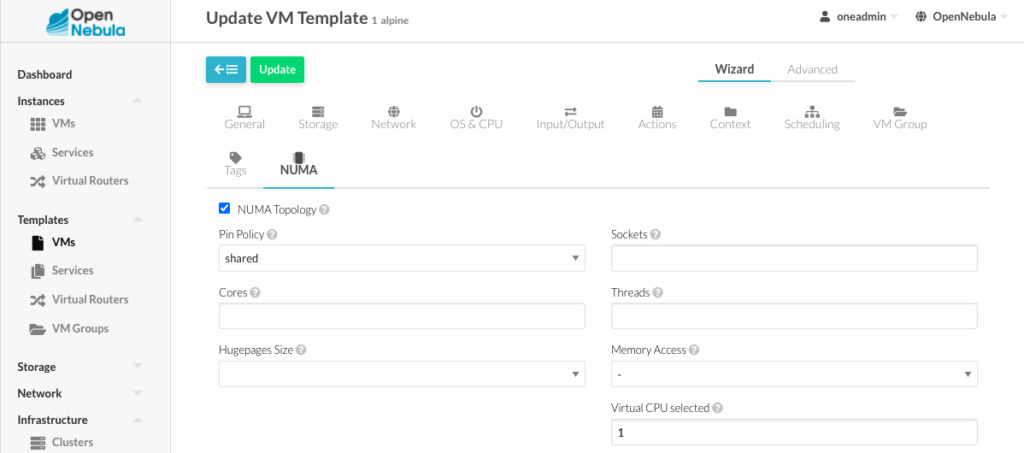

To use automatic core assignment, you need to set a PIN_POLICY in the VM Template: edit the VM Template, enable NUMA Topology in the NUMA tab, and select one of the available pin policies.

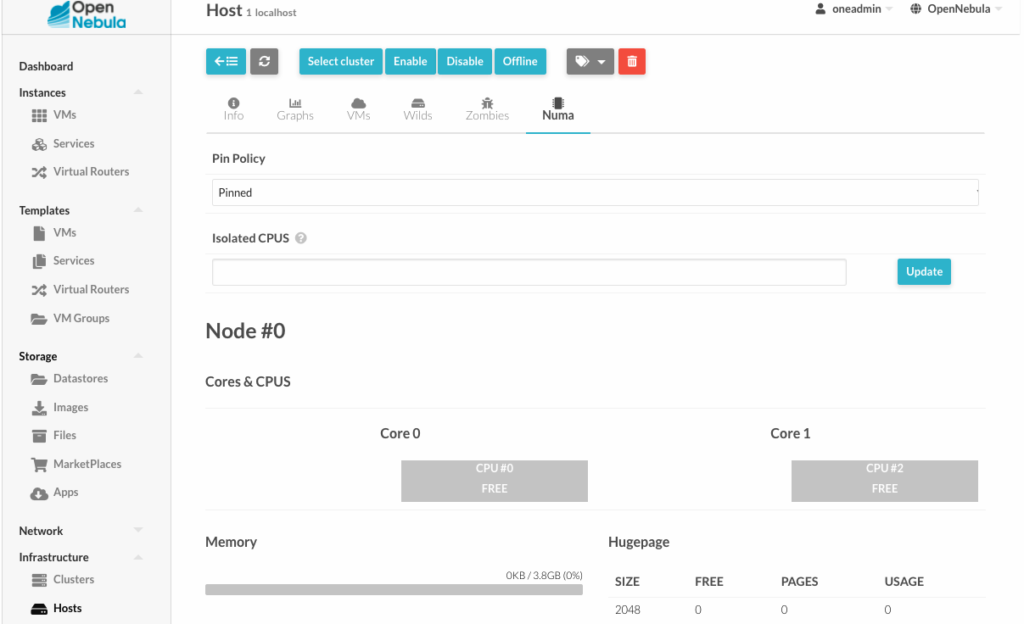

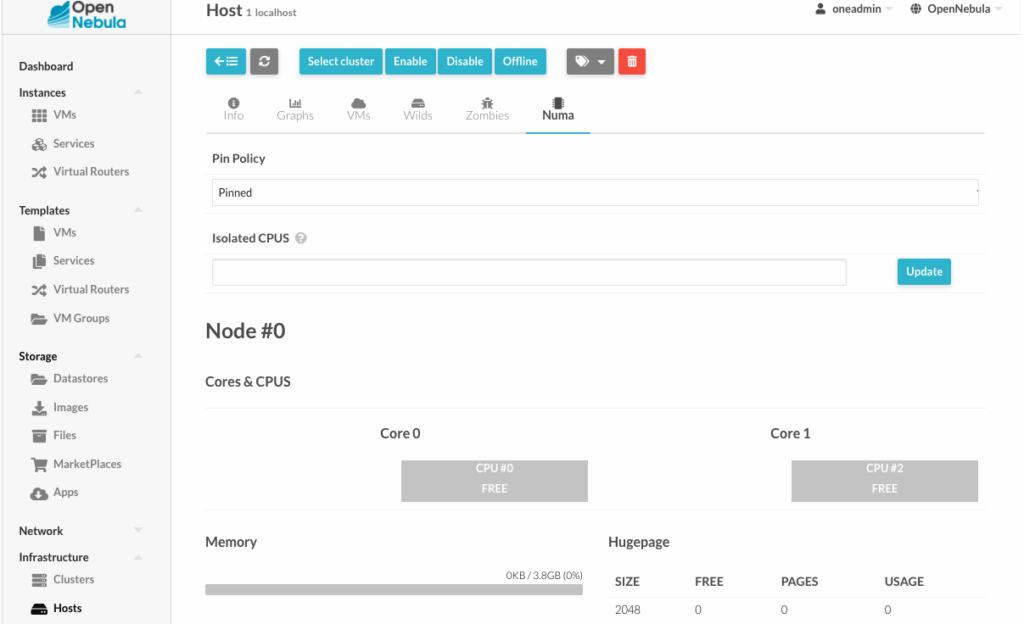

Next, enable the PINNED pin policy on the host.
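Both steps boil down to template attributes. As far as we understand the OpenNebula documentation, the NUMA tab writes a `TOPOLOGY` vector with a `PIN_POLICY` (for example `CORE`, `THREAD` or `SHARED`) into the VM Template, and the host setting is a `PIN_POLICY = "PINNED"` attribute in the host template. The hypothetical helpers below only render these fragments as text so you can see their shape; please verify the exact attribute names and accepted values against the documentation for your OpenNebula version.

```python
from typing import Optional


def vm_topology_fragment(pin_policy: str, sockets: Optional[int] = None) -> str:
    """Render a VM TOPOLOGY vector (assumed syntax, see lead-in)."""
    attrs = [f'PIN_POLICY = "{pin_policy.upper()}"']
    if sockets is not None:  # optional, only included when requested
        attrs.append(f'SOCKETS = "{sockets}"')
    return "TOPOLOGY = [ " + ", ".join(attrs) + " ]"


def host_pinning_fragment() -> str:
    """Host template attribute that enables pinning on the host (assumed syntax)."""
    return 'PIN_POLICY = "PINNED"'


if __name__ == "__main__":
    print(vm_topology_fragment("core"))  # goes into the VM Template
    print(host_pinning_fragment())       # goes into the host template
```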

The scheduler will then automatically place the VMs on the host’s cores, according to the selected policy and which cores are still free.

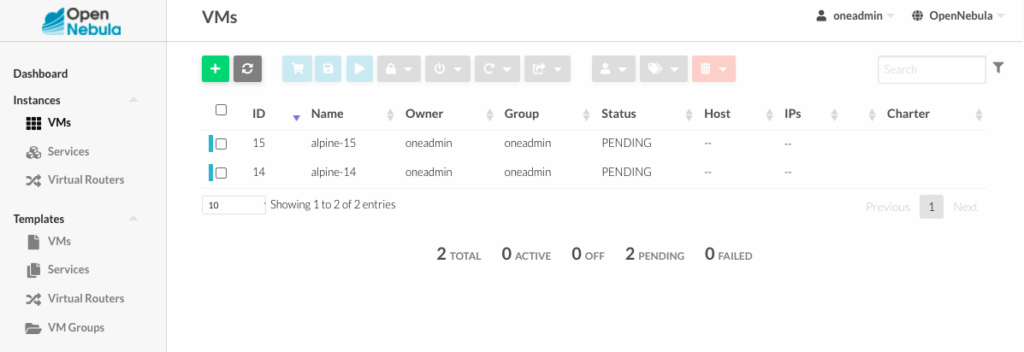
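The scheduler's actual placement logic isn't described here, so the following is only a simplified model of the idea: a hypothetical `Host` records which cores each NUMA node owns and which are already pinned, and a hypothetical `pin_vm` performs a first-fit assignment, returning attributes shaped like the NUMA_NODE vectors the scheduler generates. It ignores memory capacity, hardware threads and the differences between pin policies, so it should not be read as OpenNebula's algorithm.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Host:
    # NUMA node ID -> core IDs on that node (hypothetical model of a PINNED host)
    nodes: Dict[int, List[int]]
    used: Set[int] = field(default_factory=set)  # cores already pinned to VMs


def pin_vm(host: Host, vcpus: int, memory_kb: int) -> Optional[dict]:
    """First-fit: take the lowest free cores from the first node that fits the whole VM."""
    for node_id in sorted(host.nodes):
        free = [c for c in sorted(host.nodes[node_id]) if c not in host.used]
        if len(free) >= vcpus:
            chosen = free[:vcpus]
            host.used.update(chosen)  # mark the cores as taken
            # Field names mirror the NUMA_NODE vectors shown later in this post.
            return {
                "CPUS": ",".join(str(c) for c in chosen),
                "MEMORY": str(memory_kb),
                "MEMORY_NODE_ID": str(node_id),
                "NODE_ID": str(node_id),
                "TOTAL_CPUS": str(vcpus),
            }
    return None  # no single NUMA node has enough free cores


if __name__ == "__main__":
    host = Host(nodes={0: [0, 1, 2, 3]})
    print(pin_vm(host, 1, 786432))
    print(pin_vm(host, 1, 786432))
```

In this model, two consecutive single-vCPU VMs on a fresh one-node host get cores 0 and 1; the real scheduler may choose differently, as the deployment in this post shows.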

Now let’s deploy some VMs! 🤓

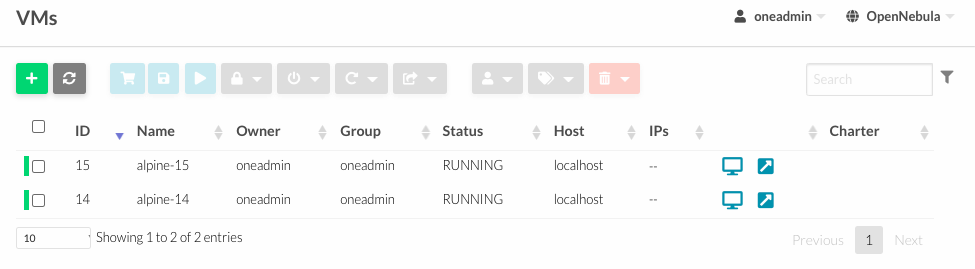

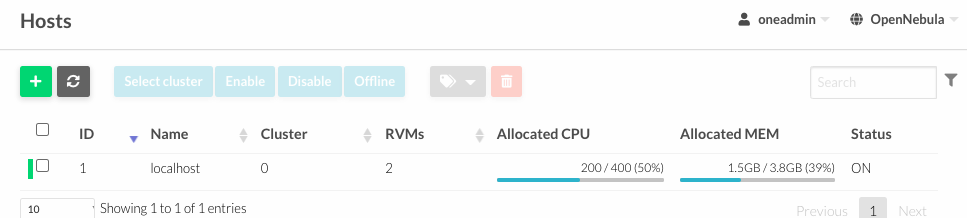

Once they are deployed, we can check the host’s resource allocation.

Here we can see which cores were assigned to the VMs running on the host. Checking the VM Templates, we can also see the NUMA_NODE configuration the scheduler generated for each VM:

```
NUMA_NODE = [
  CPUS = "2",
  MEMORY = "786432",
  MEMORY_NODE_ID = "0",
  NODE_ID = "0",
  TOTAL_CPUS = "1" ]

NUMA_NODE = [
  CPUS = "0",
  MEMORY = "786432",
  MEMORY_NODE_ID = "0",
  NODE_ID = "0",
  TOTAL_CPUS = "1" ]
```
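To inspect these values programmatically, here is a minimal parser for NUMA_NODE vectors. It assumes the simple `KEY = "value"` layout shown above (no nested vectors, no escaped quotes) and also accepts curly quotes, which often appear when such snippets are copied from web pages. The function name `parse_numa_nodes` is hypothetical; this is not an OpenNebula API.

```python
import re
from typing import Dict, List

# One NUMA_NODE = [ ... ] vector; non-greedy so each vector is matched separately.
VECTOR_RE = re.compile(r"NUMA_NODE\s*=\s*\[(.*?)\]", re.S)
# KEY = "value" pairs inside a vector.
PAIR_RE = re.compile(r'(\w+)\s*=\s*"([^"]*)"')
# Map curly double quotes to straight ones before parsing.
QUOTES = str.maketrans({"\u201c": '"', "\u201d": '"'})


def parse_numa_nodes(template: str) -> List[Dict[str, str]]:
    """Return one dict of attributes per NUMA_NODE vector, in template order."""
    text = template.translate(QUOTES)
    return [dict(PAIR_RE.findall(body)) for body in VECTOR_RE.findall(text)]
```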

This configuration is then translated into cgroup limits in each container’s LXC configuration file:

```
root@cgroupsv2-d11-topology:~# cat /var/lib/lxc/one-15/config | grep cgroup
lxc.cgroup2.cpu.weight = '1024'
lxc.cgroup2.cpuset.cpus = '2'
lxc.cgroup2.cpuset.mems = '0'
lxc.cgroup2.memory.max = '768M'
lxc.cgroup2.memory.low = '692'
lxc.cgroup2.memory.oom.group = '1'

root@cgroupsv2-d11-topology:~# cat /var/lib/lxc/one-14/config | grep cgroup
lxc.cgroup2.cpu.weight = '1024'
lxc.cgroup2.cpuset.cpus = '0'
lxc.cgroup2.cpuset.mems = '0'
lxc.cgroup2.memory.max = '768M'
lxc.cgroup2.memory.low = '692'
lxc.cgroup2.memory.oom.group = '1'
```
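Comparing the two outputs, the mapping appears to be: CPUS becomes `cpuset.cpus`, MEMORY_NODE_ID becomes `cpuset.mems`, and MEMORY (in KB, so 786432 KB = 768 MiB) becomes `memory.max`. The sketch below reproduces only that inferred mapping for one VM's NUMA nodes; it is not the driver's actual code, and it leaves out `cpu.weight`, `memory.low` and `memory.oom.group`, whose derivation isn't described here. The function name `numa_to_cgroup_lines` is hypothetical.

```python
from typing import Dict, List


def numa_to_cgroup_lines(nodes: List[Dict[str, str]]) -> List[str]:
    """Translate one VM's NUMA_NODE attributes into lxc.cgroup2 settings (inferred mapping)."""
    # All pinned cores of the VM, across its NUMA nodes.
    cpus = ",".join(n["CPUS"] for n in nodes)
    # Memory nodes the container may allocate from, deduplicated and sorted numerically.
    mems = ",".join(sorted({n["MEMORY_NODE_ID"] for n in nodes}, key=int))
    # MEMORY is expressed in KB; convert the total to MiB for memory.max.
    memory_mb = sum(int(n["MEMORY"]) for n in nodes) // 1024
    return [
        f"lxc.cgroup2.cpuset.cpus = '{cpus}'",
        f"lxc.cgroup2.cpuset.mems = '{mems}'",
        f"lxc.cgroup2.memory.max = '{memory_mb}M'",
    ]


if __name__ == "__main__":
    vm = [{"CPUS": "2", "MEMORY": "786432", "MEMORY_NODE_ID": "0",
           "NODE_ID": "0", "TOTAL_CPUS": "1"}]
    print("\n".join(numa_to_cgroup_lines(vm)))
```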

We encourage you to test this new feature on hosts with more complex topologies (for example, a server with several NUMA nodes). On such hosts, the cpuset.mems cgroup setting also restricts the container’s memory allocations to the NUMA node(s) assigned by the scheduler.
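When testing on a multi-NUMA host, it helps to know which cores belong to which node. On Linux, each node's cores are listed in `/sys/devices/system/node/node<N>/cpulist` using the same list syntax as `cpuset.cpus`. The Linux-only sketch below reads that layout; the function names `parse_cpulist` and `host_numa_cores` are hypothetical.

```python
import glob
import os
import re
from typing import Dict, List


def parse_cpulist(text: str) -> List[int]:
    """Expand a cpulist such as "0-3,8-11" into [0, 1, 2, 3, 8, 9, 10, 11]."""
    cores: List[int] = []
    for part in text.strip().split(","):
        if not part:  # memory-only nodes have an empty cpulist
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cores.extend(range(int(lo), int(hi) + 1))
        else:
            cores.append(int(part))
    return cores


def host_numa_cores(sysfs: str = "/sys/devices/system/node") -> Dict[int, List[int]]:
    """Map each NUMA node ID to its cores; returns {} if the sysfs path is missing."""
    topology: Dict[int, List[int]] = {}
    for path in glob.glob(os.path.join(sysfs, "node[0-9]*")):
        match = re.search(r"node(\d+)$", path)
        if match is None:
            continue
        with open(os.path.join(path, "cpulist")) as f:
            topology[int(match.group(1))] = parse_cpulist(f.read())
    return dict(sorted(topology.items()))


if __name__ == "__main__":
    for node, cores in host_numa_cores().items():
        print(f"NUMA node {node}: cores {cores}")
```

If, for instance, a container's `cpuset.cpus` only contains cores that belong to node 1, you would expect its `cpuset.mems` to be `'1'`.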

We hope you find this new feature useful and, as always, don’t hesitate to send us your feedback! 📡

