🖊️ This blog post has been co-authored by Andrés Pozo (DevOps Engineer & Software Developer), David Artuñedo (CEO at OnLife Networks), and Jesús Macías (DevSecOps Advocate & Innovation Engineer).

Cloud computing has become the go-to standard for managing IT resources in companies of all sizes. The big advantages it provides for computational resource management are unbeatable in terms of flexibility and scalability—but it has some shortcomings too. One of the main drawbacks comes from the fact that cloud computing significantly increases the level of complexity of the resulting system.

Many cloud management solutions try to address this complexity problem by performing choices for the user as part of standardized, ‘vanilla’ deployments. Configurations tend to be set to values that are optimized for a majority of users. In this way, the people using those solutions are not required to understand all the technical details in order to make use of them.

But this model comes with some limitations: what happens when this one-size-fits-most technology doesn’t cover the particular problem you are trying to solve? We at the Telefónica Innovation team have been working on innovative solutions around Edge Computing. The challenge was to adapt the networking equipment we have at our Central Offices to this software-and-cloud-eats-all era. In this post we’ll explain our experience in one of those scenarios and how we have managed to address the traffic throughput challenges of Network Functions Virtualization (NFV) by using OpenNebula’s flexibility and extensibility 🚀

Houston, we have a problem

A common issue for Telecom and networking vendors, in general, is how to optimize the performance you get from your virtualized network functions (VNF) when your workload is mostly managing traffic packets (for example in virtual routers, firewalls, etc.). In one of our work lines, we are exploring the possibility of removing our customers’ physical routers at home and running them as virtualized routers in our Central Offices instead. This concept is known as Virtual CPE (vCPE). This move would also allow us to provide new services through those virtualized routers based on the many potentialities of Edge Computing. We’re interested—who’s not?—in providing the greatest possible performance out of our hardware infrastructure.

This scenario implies some clear constraints:

- The solution has to be horizontally scalable (we’d like to have as many virtual routers in a host as possible).

- The solution has to manage the maximum possible amount of traffic for this transformation to make sense from a return-on-investment perspective.

- The solution has to be secure and each user’s traffic has to run separately from the traffic that other customers generate (we actually address this with a QinQ VLAN configuration).

We at Telefónica have deployed an Edge datacenter full of x86_64 virtualization servers—each of them with 198GB of RAM, 88 CPU threads, and high-speed 40Gb NICs—in one of our Central Offices. What we really want to do now is to use this powerful infrastructure to deploy the largest possible amount of KVM-based virtual machines (small routers based on OpenWRT) and provide our customers with the basic network services they need (DNS, DHCP, QoS, etc.) plus, of course, high-speed connectivity to the internet and other on-line services.

The main issue that comes up when trying to accomplish that objective with the classical ‘interrupt-driven’ approach to packet management in Linux hosts is that, with high levels of traffic like ours, you hit a bottleneck pretty soon (i.e. the number of packets processed per second, or throughput, that we can achieve is too low). This is due to the excessive number of interrupts in the Linux kernel. Some offloading features in physical NICs may help to mitigate this problem, but they’re not enough to take full advantage of the high-speed NICs we’ve got nowadays.
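To get a feel for the packet rates involved, here is a back-of-the-envelope sketch (an illustration, not a measurement from our tests). It computes the theoretical maximum frame rate of a 40 Gb/s Ethernet link, assuming the standard 20 bytes of per-frame wire overhead (preamble plus inter-frame gap). The function name is made up for this example.

```python
# Per-frame overhead on the wire: 8-byte preamble/SFD + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def max_frames_per_second(link_bps: float, frame_bytes: int) -> float:
    """Theoretical line-rate frame rate for a given Ethernet frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_per_frame


if __name__ == "__main__":
    # Smallest and largest standard (untagged) Ethernet frame sizes.
    for size in (64, 1518):
        mpps = max_frames_per_second(40e9, size) / 1e6
        print(f"{size:>5}-byte frames: {mpps:.2f} Mpps")
```

Even with full-size frames this is more than three million packets per second on a single NIC, which gives an idea of why handling each packet through an interrupt stops scaling.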

There are many companies working on different approaches to address this problem and we decided to test one of them in our lab. We selected the Data Plane Development Kit (DPDK) because of the expected improvement in performance that had been reported and the good level of the project’s documentation. We assumed it would not be very hard to implement this test thanks to OpenNebula’s extensibility functions—and we were quite right! 😊

DPDK, OVS, and OpenNebula

When we carried out our initial interrupt-based tests, we immediately observed that we were very quickly reaching the maximum number of interrupts and context switches that our hosts could handle. Having a large number of VMs processing those packets (generating kvm_entry and kvm_exit events in the system) didn’t help either… When we tuned some of the offload settings in the NICs, the performance improved a little, but we soon realized that this way we weren’t actually using our hardware efficiently.

DPDK is a technology that, instead of managing the packets at kernel level with interrupts, handles the packets in user space with shared memory access. The packets are processed by some CPU cores dedicated exclusively to this task (using Poll Mode Driver threads instead of interrupts). The rest of the CPU power can then be used to process the VMs’ regular workload.
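As a small illustration of what dedicating cores to polling looks like in practice, the sketch below builds the hexadecimal bitmask that OVS expects in its `other_config:pmd-cpu-mask` setting and prints the matching `ovs-vsctl` call without running it. The core numbers are hypothetical and the helper names are invented for this example; it is not the configuration we used in the lab.

```python
def cpu_mask(cores):
    """Return a hex bitmask with one bit set per CPU core ID."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return hex(mask)


def pmd_mask_command(cores):
    """Build (but do not run) the ovs-vsctl call that sets the PMD core mask."""
    return [
        "ovs-vsctl", "set", "Open_vSwitch", ".",
        f"other_config:pmd-cpu-mask={cpu_mask(cores)}",
    ]


if __name__ == "__main__":
    # Hypothetical choice: dedicate cores 2 and 3 to packet polling.
    print(" ".join(pmd_mask_command([2, 3])))
```

Cores selected this way spin at 100% polling the NIC queues, so they should be left out of the pool used for VM workloads.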

For the networking inside the virtualization hosts we used the awesome OpenVSwitch (OVS), which already supports DPDK natively. The available documentation for both DPDK and OpenVSwitch is really good, and some handy introductions to DPDK and OVS-DPDK can also be found on Intel’s and OVS’s sites.

On top of that, the hosts we were using for these tests had a NUMA architecture. For DPDK it is important to be able to pin the virtual machines precisely to specific cores in the host, as accessing resources (i.e. processes and memory) across the two NUMA nodes in the host is expensive in terms of time and performance.
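To make the pinning idea concrete, here is a minimal sketch that expands a Linux CPU list (the format found in `/sys/devices/system/node/node0/cpulist`) and picks cores for a VM from a single NUMA node while skipping cores reserved for polling. The CPU layout and the `pick_cpus` helper are hypothetical; in our setup the actual placement is done by OpenNebula, not by a script like this.

```python
def parse_cpulist(text):
    """Expand a kernel CPU list such as '0-3,8-11' into a list of CPU IDs."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def pick_cpus(node_cpus, reserved, count):
    """Pick `count` CPUs from one NUMA node, skipping reserved (e.g. PMD) cores."""
    free = [c for c in node_cpus if c not in reserved]
    if len(free) < count:
        raise ValueError("not enough free CPUs on this NUMA node")
    return free[:count]


if __name__ == "__main__":
    # Hypothetical layout; on a real host, read the cpulist file from sysfs.
    node0 = parse_cpulist("0-3,8-11")
    print(pick_cpus(node0, reserved={0, 1}, count=2))
```

Keeping a VM's vCPUs, its memory, and the polling cores that serve its interface on the same node avoids the cross-node traffic described above.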

After a thorough analysis, we figured out exactly what we needed OpenNebula to provide in order to be able to support our ‘experiment’ with OVS-DPDK:

- OpenNebula had to be able to select the exact resources from the virtualization host where the VMs would be placed. OpenNebula should be aware of the host’s NUMA architecture and provide means to define how and where the resources of the VMs will be deployed.

- For the VMs to make use of DPDK-based interfaces, the guest and the host both need shared access to common memory, including when that memory is backed by hugepages.

- Given that the interfaces we were using for these tests were vhost-user, the operations needed to define the virtual interface and attach it to OVS are different. A new bridge type, openvswitch_dpdk, has been added to the ovswitch driver in OpenNebula to support this functionality.

- As we were handling the traffic with QinQ VLAN tagging, we needed to extend the driver functionality with some logic that would install or remove (depending on the case) the specific OVS rules that manage the VLAN tagging to properly implement our design. This was achieved by using the extensibility feature in OpenNebula’s network drivers.
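For readers unfamiliar with OVS-DPDK, the sketch below lists the kind of `ovs-vsctl` calls involved in attaching a vhost-user interface and tagging its traffic. It only builds the argument lists and does not execute anything. Two caveats: the bridge, port, and socket names are hypothetical, and for QinQ it uses OVS’s built-in `dot1q-tunnel` port mode as a stand-in, which is not the custom set of OVS rules our driver extension installs.

```python
def vhostuser_port_commands(bridge, port, socket_path, service_vlan):
    """Return ovs-vsctl argument lists (not executed) for one VM interface."""
    return [
        # Bridge using the userspace (DPDK) datapath.
        ["ovs-vsctl", "--may-exist", "add-br", bridge,
         "--", "set", "bridge", bridge, "datapath_type=netdev"],
        # vhost-user client port: OVS connects to a socket created by QEMU.
        ["ovs-vsctl", "--may-exist", "add-port", bridge, port,
         "--", "set", "Interface", port, "type=dpdkvhostuserclient",
         f"options:vhost-server-path={socket_path}"],
        # Stand-in for QinQ: wrap the VM's own VLAN tags in an outer service tag.
        ["ovs-vsctl", "set", "port", port,
         "vlan_mode=dot1q-tunnel", f"tag={service_vlan}"],
    ]


if __name__ == "__main__":
    # Hypothetical names and VLAN ID, for illustration only.
    for cmd in vhostuser_port_commands("br-dpdk", "vhu0", "/tmp/vhu0.sock", 100):
        print(" ".join(cmd))
```

Building the commands as lists keeps them easy to inspect or log before handing them to something like `subprocess.run`, which is left out here on purpose.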

We presented these requirements to the OpenNebula Team and in a few days the changes were implemented and we were already testing them! 😀 These improvements have been out there and available for the Community since OpenNebula 5.10 “Boomerang”.

Tests and some results

The traffic scenario we used to model our users’ load was based on downloading a single 1Mb file from an HTTP server that was connected to our server through 40Gb interfaces and switches. Needless to say, it’s not a complex model of user traffic, but nowadays most of the traffic in the network is video streams served via HTTP, so it was a good enough approximation to a typical situation—at least in the first phase.

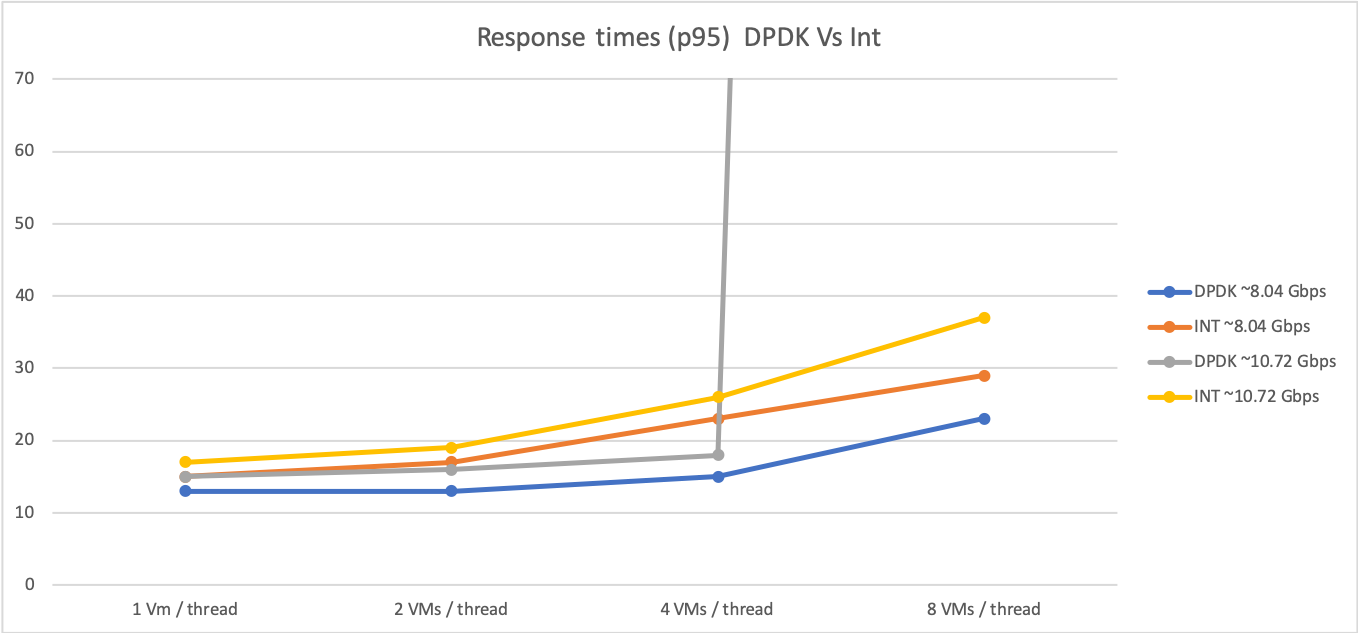

One of the main things we wanted to obtain with these tests was a model showing exactly how traffic increases affect performance (i.e. response times) depending on the level of oversubscription of VMs per host threads.
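As a minimal sketch of how a set of download times can be reduced to percentiles, the snippet below uses the nearest-rank definition. That definition is an assumption made for this example, and the sample values are made up, not data from our tests.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of the data at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[rank - 1]


def summarize(samples_ms, percentiles=(50, 90, 99)):
    """Map each requested percentile to its download time in milliseconds."""
    return {p: percentile(samples_ms, p) for p in percentiles}


if __name__ == "__main__":
    # Made-up download times in milliseconds, for illustration only.
    demo = [12, 15, 11, 14, 90, 13, 16, 12, 18, 250]
    print(summarize(demo))
```

High percentiles are the interesting ones here: a host can look healthy on the median while a small fraction of sessions is already suffering from saturation.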

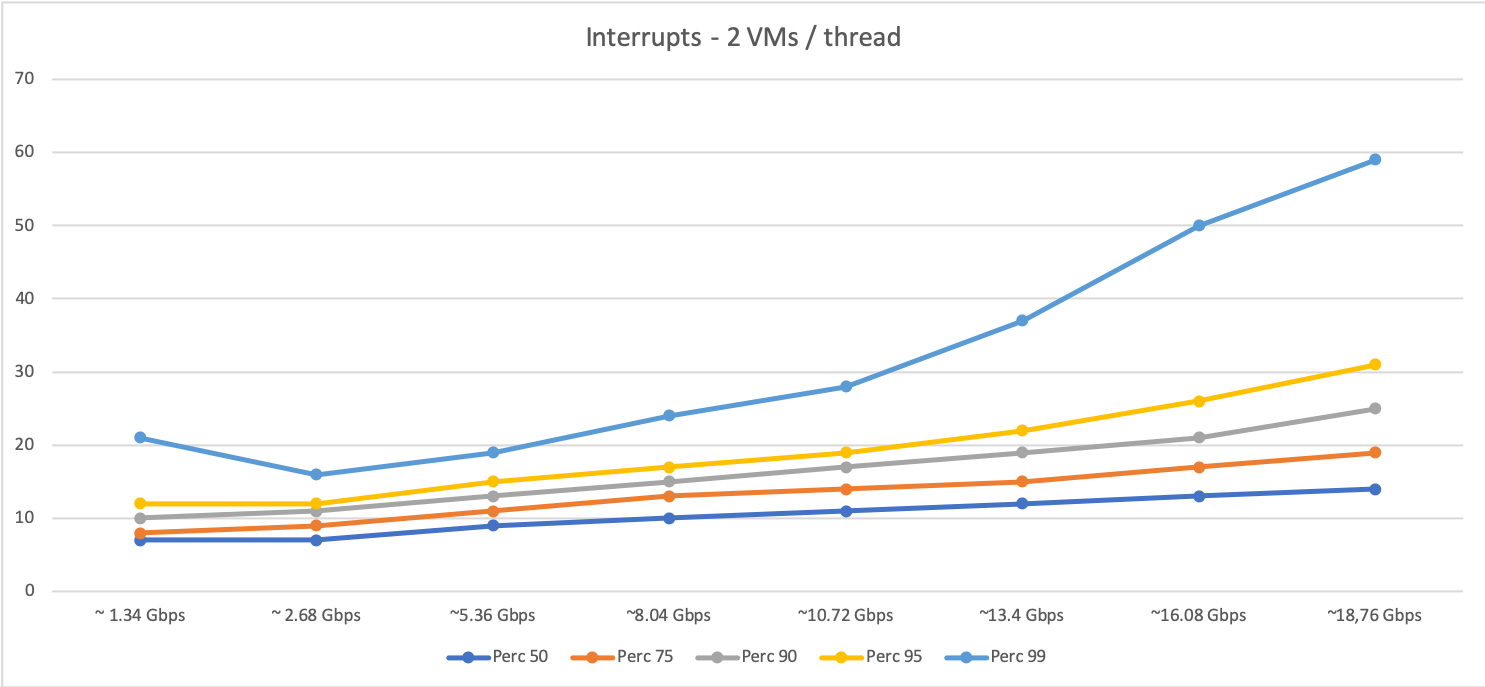

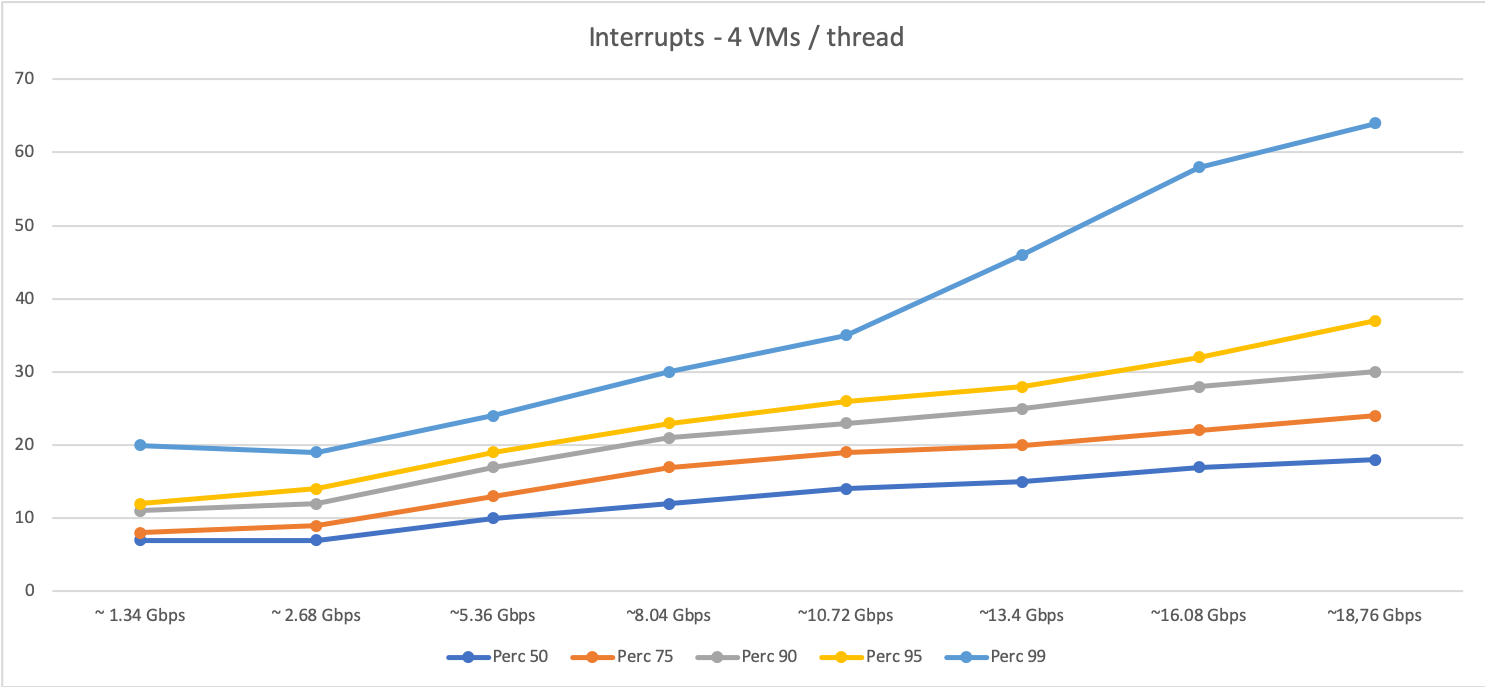

The first two graphs below display the data we got for interrupt-driven traffic (note that the X axis is the amount of traffic managed by the host and the Y axis shows percentiles of the download session time, in milliseconds):

With 2 VMs per host thread:

…and with 4 VMs per host thread:

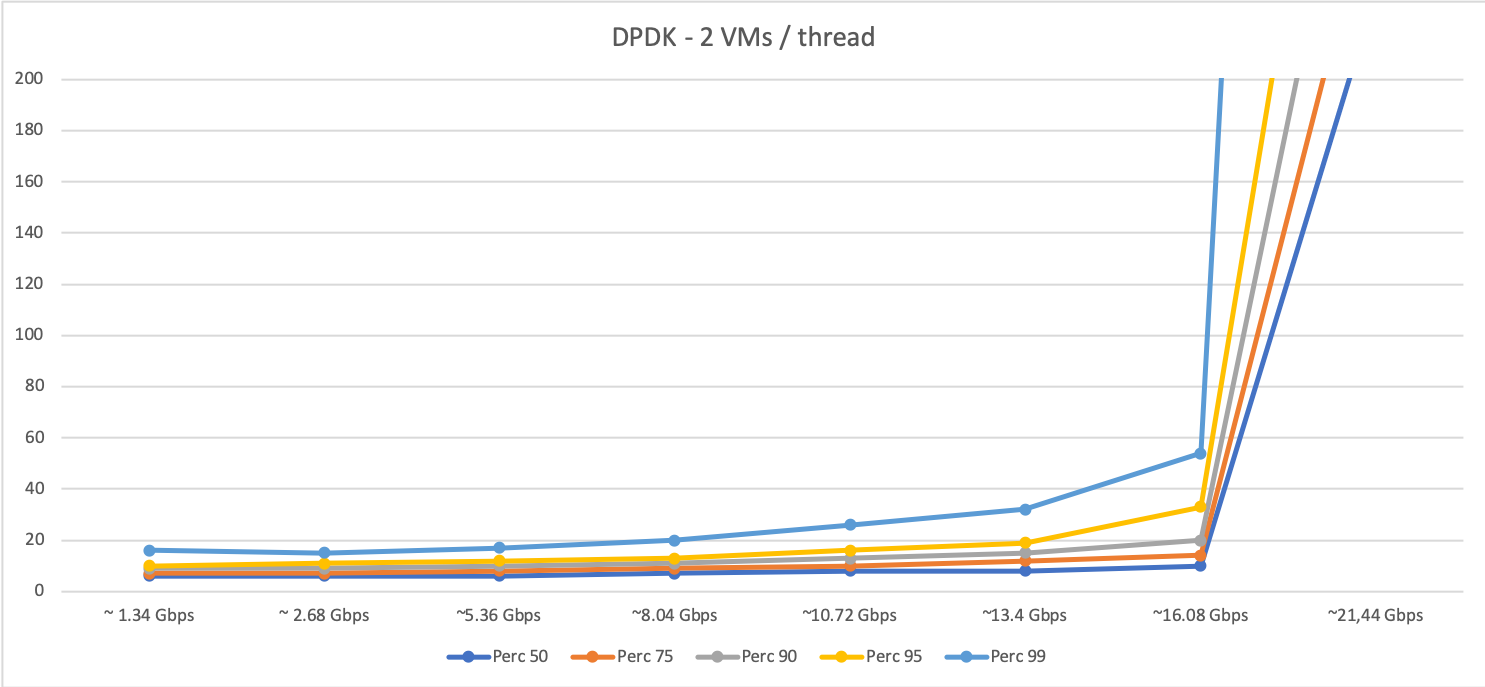

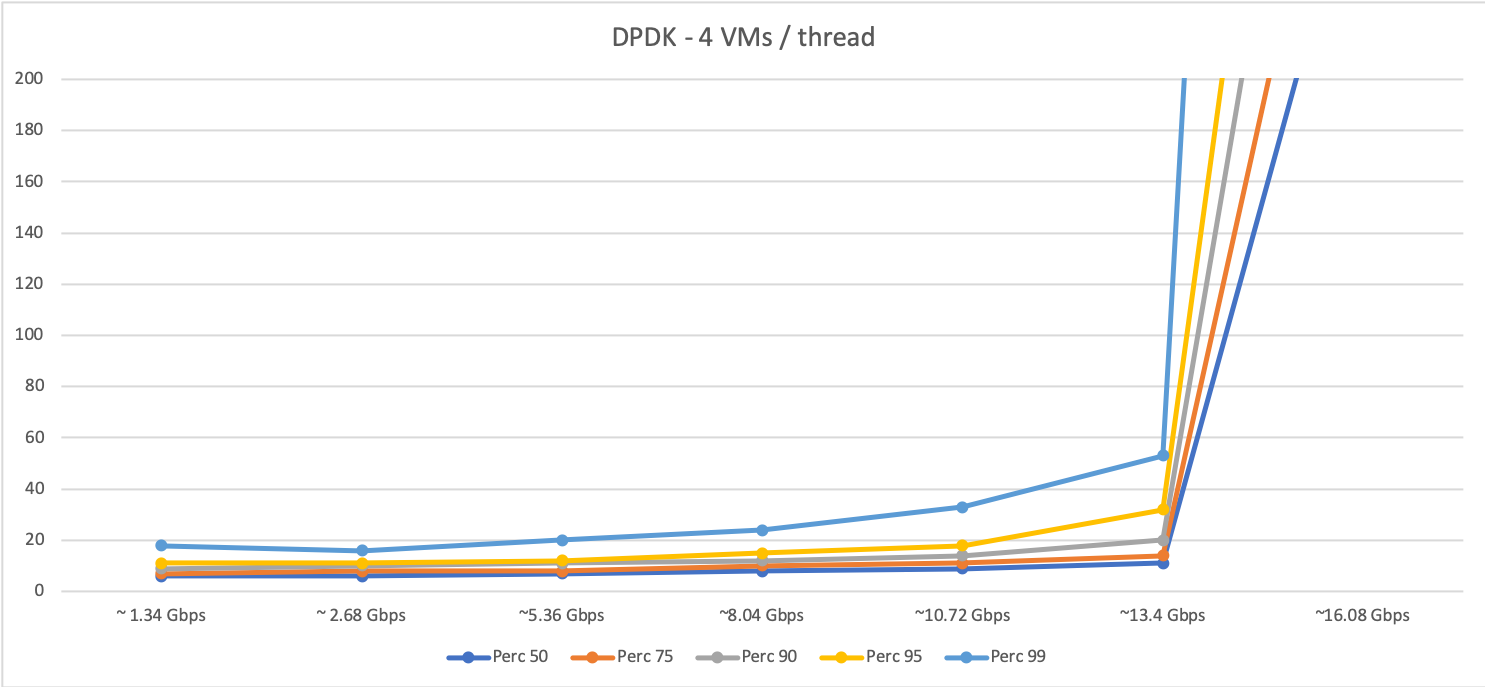

Now, the following two graphs show the data we obtained for DPDK-based traffic (for two VMs and four VMs per host thread, respectively):

As you can see, DPDK generally behaves slightly better than interrupt-driven traffic (faster response times) for the same amount of traffic. However, when traffic increases and a certain packet rate is reached, the system gets to a saturation point where the performance degrades quickly. Furthermore, as expected, the saturation point is lower when the oversubscription grows (with four VMs per host thread the system becomes saturated at some point between 8 and 10 Gbps, as shown in the graph).

We can conclude that, as long as traffic doesn’t reach the saturation point, DPDK does help in traffic management in a non-oversubscribed scenario. However, in highly oversubscribed scenarios the interrupt-driven traffic is able to handle traffic better than DPDK and degrades in a smoother way.

The research can continue by reducing the number of kvm_entry and kvm_exit events in the host’s operating system. Maybe OpenNebula’s native LXC support is a good solution for that… you’ll have to wait for another blog post to find out 😉

Conclusions

We think OpenNebula is a great VIM tool that makes it fast and easy to manage hardware infrastructure. It also comes with extensibility as a fundamental design principle, so it’s easy to adapt and include the additional functionality you might require for your particular needs, as was the case with the features described in this post. The response from the OpenNebula engineering team was fantastic, so if you have suggestions for new features, don’t hesitate to contact them!

DPDK is a technology that, despite its steep learning curve, allows you, under controlled circumstances, to get the maximum amount of packet processing your hardware can handle while avoiding the interrupt and context-switching bottleneck. We feel, though, that the DPDK project should put more effort into developing debugging tools (or the relevant documentation). Sometimes it’s hard to debug the issues that come up if you don’t have equivalents to the debugging tools we normally use when carrying out tests and innovation projects like these.

We’d also like to highlight that our test scenario with high density in VMs is quite unusual and, according to our tests, DPDK is a great choice in the most common scenario with low density of VMs.

We’ll keep you posted about our progress in the future, so stay tuned. In the meantime, we hope you found this post about our experience here at Telefónica informative and inspiring. Happy hacking! 🤓

ℹ️ To learn more about how OpenNebula supports Network Functions Virtualization (NFV), download our new white paper on Enhanced Platform Awareness (EPA). To find out how to set up your Edge Cloud with OpenNebula, visit OpenNebula.io/Edge-Cloud
